
Lab 04 - Network Monitoring (Linux)

Why is Networking Important?

Having a well-established network has become an important part of our lives. The easiest way to expand your network is to build on the relationships with people you know: family, friends, classmates and colleagues. We are all expanding our networks daily.

Objectives

- Offer an introduction to network monitoring.

- Get you acquainted with a few standard Linux monitoring tools and their outputs, for monitoring the impact of the network on the system.

- Provide a set of insights into networks and connection behavior.


Introduction

01. Ethernet Configuration Settings

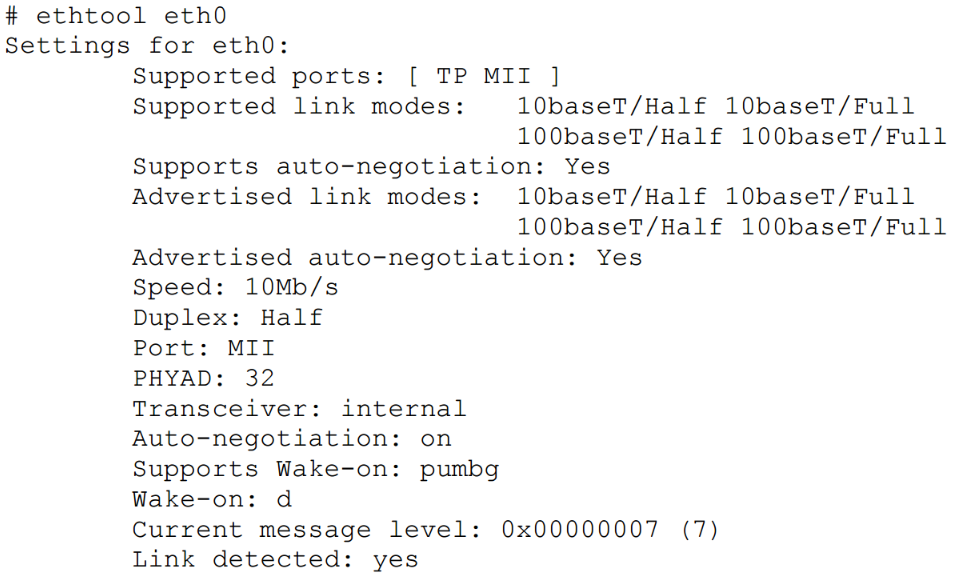

Most enterprise Ethernet networks run at either 100 or 1000BaseTX. Use ethtool to verify that a specific system has synced at the expected speed.

In the following example, a system with a 100BaseTX card has auto-negotiated down to 10BaseT.

The following command can be used to force the card into 100BaseTX:

# ethtool -s eth0 speed 100 duplex full autoneg off
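On Linux, the kernel also exposes the speed and duplex that ethtool reports under /sys/class/net/<iface>/. The following minimal Python sketch assumes that sysfs layout and checks whether an interface runs at an expected rate. The helper names and the 100 Mb/s full-duplex defaults are illustrative and not part of the lab.

```python
from pathlib import Path
from typing import Optional, Tuple

SYSFS_NET = "/sys/class/net"

def link_settings(iface: str, sysfs_net: str = SYSFS_NET) -> Tuple[Optional[int], Optional[str]]:
    """Return (speed in Mb/s, duplex) as reported by the kernel for `iface`."""
    base = Path(sysfs_net) / iface
    try:
        speed: Optional[int] = int((base / "speed").read_text().strip())
        if speed < 0:  # some drivers report -1 when the speed is unknown
            speed = None
    except (OSError, ValueError):  # link down, virtual device, or no speed file
        speed = None
    try:
        duplex: Optional[str] = (base / "duplex").read_text().strip()
    except OSError:
        duplex = None
    return speed, duplex

def link_ok(iface: str, speed_mbps: int = 100, duplex: str = "full",
            sysfs_net: str = SYSFS_NET) -> bool:
    """True if the interface negotiated exactly the expected speed and duplex."""
    return link_settings(iface, sysfs_net) == (speed_mbps, duplex)

if __name__ == "__main__":
    net = Path(SYSFS_NET)
    if net.is_dir():
        for dev in sorted(p.name for p in net.iterdir()):
            print(dev, link_settings(dev))
```

A system in the situation described above would show up as `(10, ...)` instead of `(100, 'full')`.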

02. Monitoring Network Throughput

Using iptraf for Local Throughput

Using netperf for Endpoint Throughput

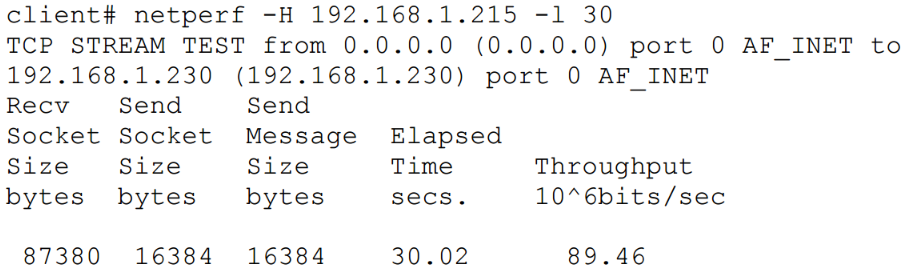

To perform a basic controlled throughput test, the netperf server must be running on the server system (server# netserver).

There are multiple tests that the netperf utility can perform. The most basic one is a standard throughput test. The following test, initiated from the client, performs a 30-second test of TCP-based throughput on a LAN. The output shows that the throughput on the network is around 89 Mbps. The server (192.168.1.215) is on the same LAN. This is exceptional performance for a 100 Mbps network.

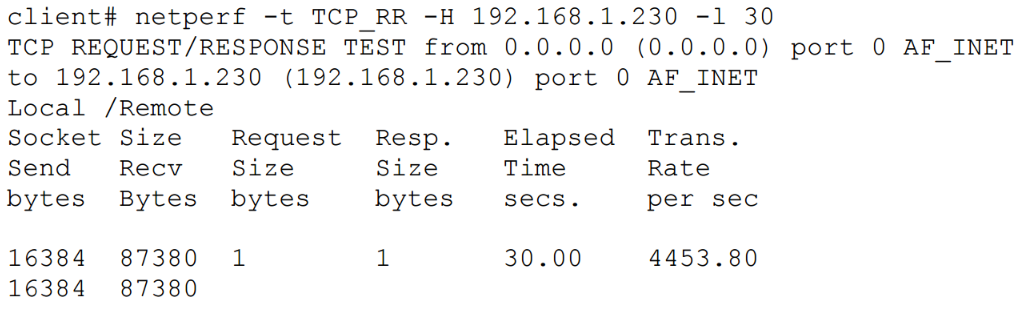

The following example simulates TCP request/response over the duration of 30 seconds.

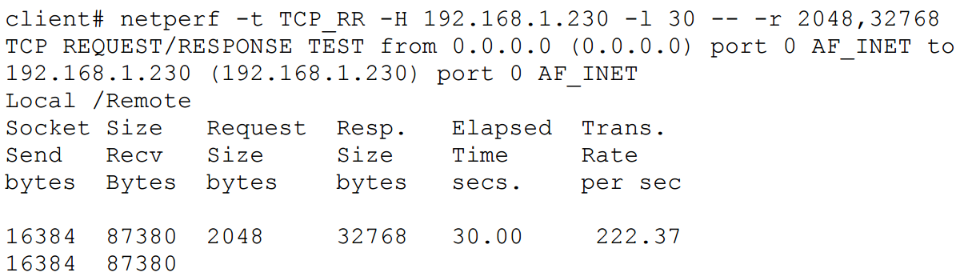

In a more realistic example, netperf uses a request size of 2K and a response size of 32K.

Using iperf to Measure Network Efficiency

03. Individual Connections with tcptrace

- TCP Retransmissions – the number of packets that needed to be sent again and the total data size

- TCP Window Sizes – identify slow connections with small window sizes

- Total throughput of the connection

- Connection duration

For more information refer to pages 34-37 from Darren Hoch’s Linux System and Performance Monitoring.

04. TCP and UDP measurements

- This issue is all too common and it has nothing to do with the network.

TCP measurements: throughput, bandwidth

- Capacity: link speed

- Narrow link: link with the lowest capacity along a path

- Capacity of the end-to-end path: capacity of the narrow link

- Utilized bandwidth: current traffic load

- Available bandwidth: capacity – utilized bandwidth

- Tight link: link with the least available bandwidth in a path

- Achievable bandwidth: includes protocol and host issues

- Many things can limit TCP throughput:

- Loss

- Congestion

- Buffer Starvation

- Out of order delivery
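The capacity definitions above are easy to mix up, especially narrow link versus tight link. The small Python sketch below uses a hypothetical three-hop path with made-up numbers. On this path the link with the lowest capacity is not the link with the least available bandwidth.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Link:
    name: str
    capacity_mbps: float  # link speed
    utilized_mbps: float  # current traffic load

    @property
    def available_mbps(self) -> float:
        """Available bandwidth = capacity - utilized bandwidth."""
        return self.capacity_mbps - self.utilized_mbps

def narrow_link(path: List[Link]) -> Link:
    """Link with the lowest capacity; it sets the end-to-end path capacity."""
    return min(path, key=lambda link: link.capacity_mbps)

def tight_link(path: List[Link]) -> Link:
    """Link with the least available bandwidth along the path."""
    return min(path, key=lambda link: link.available_mbps)

# Hypothetical path: the narrow link and the tight link are different hops.
path = [
    Link("office LAN", capacity_mbps=1000, utilized_mbps=200),
    Link("building uplink", capacity_mbps=100, utilized_mbps=10),
    Link("ISP hop", capacity_mbps=1000, utilized_mbps=950),
]
print("path capacity:", narrow_link(path).capacity_mbps, "Mb/s via", narrow_link(path).name)
print("available:", tight_link(path).available_mbps, "Mb/s via", tight_link(path).name)
```

Achievable bandwidth can be lower still, because of protocol and host limits such as the TCP window.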

TCP performance: window size

- In data transmission, TCP sends a certain amount of data and then pauses;

- To ensure proper delivery of data, it doesn't send more until it receives an acknowledgment from the remote host;

TCP performance: Bandwidth Delay Product (BDP)

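- The BDP (link bandwidth × round-trip time) is the amount of data that must be in flight to keep the path full.

- The further apart the two hosts are, the longer it takes for the sender to receive the acknowledgment from the remote host, reducing overall throughput.

- To compensate for a large BDP, we send more data at a time ⇒ we enlarge the TCP window, telling TCP to keep more data in flight per flow than the default parameters allow.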
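A quick back-of-the-envelope check helps here. The Python sketch below uses hypothetical link numbers. To fill the path, the window must be at least bandwidth × RTT. A smaller window caps a single flow at window / RTT, whatever the link speed.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the path full."""
    return bandwidth_bps * rtt_s / 8

def window_limited_bps(window_bytes: float, rtt_s: float) -> float:
    """Throughput ceiling for one flow: at most one full window per round trip."""
    return window_bytes * 8 / rtt_s

# Hypothetical path: 100 Mb/s with a 50 ms round-trip time.
bandwidth, rtt = 100e6, 0.050
print(f"BDP: {bdp_bytes(bandwidth, rtt):,.0f} bytes")
print(f"64 KiB window caps the flow at {window_limited_bps(64 * 1024, rtt) / 1e6:.1f} Mb/s")
```

With these numbers the path needs about 625 KB in flight. A window of roughly 64 KiB (the maximum without TCP window scaling) reaches only about a tenth of the link.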

TCP performance: parallel streams - read/write buffer size

- TCP breaks the stream into pieces transparently

- Longer writes often improve performance

- Let TCP “do its thing”

- Fewer system calls

- How?

- -l <size> (lower case ell)

- Example: -l 128K

- UDP doesn’t break up writes, so don’t exceed the path MTU

- The -P option sets the number of parallel streams to use
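The following Python sketch shows why longer writes mean fewer system calls. It pushes a fixed amount of data over a loopback TCP connection using a configurable write size, loosely mimicking what iperf's -l option controls. It is an illustration on 127.0.0.1, not a replacement for iperf, and the function name and sizes are assumptions. `sendall()` may itself issue several `send()` calls, so the count returned is of application-level writes.

```python
import socket
import threading
import time
from typing import Tuple

def loopback_throughput(total_bytes: int, write_size: int) -> Tuple[float, int]:
    """Send total_bytes to a local sink in writes of write_size bytes.

    Returns (throughput in Mbit/s, number of application-level writes)."""
    server = socket.create_server(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    port = server.getsockname()[1]
    received = 0

    def sink() -> None:
        nonlocal received
        conn, _ = server.accept()
        with conn:
            while True:
                chunk = conn.recv(1 << 16)
                if not chunk:  # sender closed the connection
                    break
                received += len(chunk)

    receiver = threading.Thread(target=sink)
    receiver.start()
    payload = memoryview(b"\0" * write_size)  # slicing a memoryview avoids copies
    writes = 0
    start = time.perf_counter()
    with socket.create_connection(("127.0.0.1", port)) as client:
        sent = 0
        while sent < total_bytes:
            n = min(write_size, total_bytes - sent)
            client.sendall(payload[:n])
            sent += n
            writes += 1
    receiver.join()  # stop the clock only once everything has been read
    elapsed = time.perf_counter() - start
    server.close()
    return received * 8 / elapsed / 1e6, writes

if __name__ == "__main__":
    for size in (1024, 8 * 1024, 128 * 1024):
        mbps, writes = loopback_throughput(32 * 1024 * 1024, size)
        print(f"write size {size:>7} B: {writes:>6} writes, {mbps:,.0f} Mbit/s")
```

Loopback numbers mostly reflect per-call CPU overhead, which is exactly the cost that larger writes amortize.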

UDP measurements

- Loss

- Jitter

- Out of order delivery

- Use -b to specify the target bandwidth (default is 1 Mbit/s)
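All three UDP metrics can be derived from per-packet sequence numbers and timestamps. This minimal Python sketch makes two assumptions. Each packet record is (sequence number, send time, receive time), listed in arrival order. The sender and receiver clocks may be offset, but only by a constant. Jitter follows the RFC 1889/3550 running estimate, which is the definition iperf documents for its UDP jitter. The loss and out-of-order counting rules are simplifications.

```python
from typing import Dict, List, Tuple

Packet = Tuple[int, float, float]  # (sequence number, send time, receive time), arrival order

def udp_stats(packets: List[Packet]) -> Dict[str, float]:
    if not packets:
        return {"lost": 0, "out_of_order": 0, "jitter": 0.0}
    seqs = [seq for seq, _, _ in packets]
    expected = max(seqs) - min(seqs) + 1
    lost = expected - len(set(seqs))  # missing sequence numbers (duplicates ignored)

    out_of_order = 0
    highest = seqs[0]
    for seq in seqs[1:]:
        if seq < highest:  # arrived after a packet that was sent later
            out_of_order += 1
        highest = max(highest, seq)

    jitter = 0.0
    prev_transit = packets[0][2] - packets[0][1]
    for _, sent, recv in packets[1:]:
        transit = recv - sent  # a constant clock offset cancels in the difference below
        jitter += (abs(transit - prev_transit) - jitter) / 16  # RFC 3550 smoothing
        prev_transit = transit
    return {"lost": lost, "out_of_order": out_of_order, "jitter": jitter}
```

Losses at the very end of a stream cannot be detected this way, because no later sequence number reveals the gap.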

Good to know:

- Check to make sure all Ethernet interfaces are running at proper rates.

- Check total throughput per network interface and be sure it is in line with network speeds.

- Monitor network traffic types to ensure that the appropriate traffic has precedence on the system.
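For the per-interface throughput check, Linux publishes byte counters in /sys/class/net/<iface>/statistics/. The Python sketch below assumes that sysfs layout. It samples rx_bytes and tx_bytes twice and converts the difference into Mbit/s, for comparison with the negotiated link speed. The function names are illustrative.

```python
import time
from pathlib import Path
from typing import Dict, Tuple

SYSFS_NET = "/sys/class/net"

def read_counters(iface: str, sysfs_net: str = SYSFS_NET) -> Tuple[int, int]:
    """Cumulative (rx_bytes, tx_bytes) for one interface."""
    stats = Path(sysfs_net) / iface / "statistics"
    return int((stats / "rx_bytes").read_text()), int((stats / "tx_bytes").read_text())

def rate_mbps(before: Tuple[int, int], after: Tuple[int, int],
              interval_s: float) -> Tuple[float, float]:
    """Convert two counter samples taken interval_s apart into (rx, tx) Mbit/s."""
    return tuple((a - b) * 8 / interval_s / 1e6 for b, a in zip(before, after))

def sample_all(interval_s: float = 1.0,
               sysfs_net: str = SYSFS_NET) -> Dict[str, Tuple[float, float]]:
    """Measure every interface that has counters over the same interval."""
    ifaces = sorted(p.name for p in Path(sysfs_net).iterdir() if (p / "statistics").is_dir())
    before = {i: read_counters(i, sysfs_net) for i in ifaces}
    time.sleep(interval_s)
    return {i: rate_mbps(before[i], read_counters(i, sysfs_net), interval_s) for i in ifaces}

if __name__ == "__main__":
    if Path(SYSFS_NET).is_dir():
        for iface, (rx, tx) in sample_all().items():
            print(f"{iface:>10}: rx {rx:8.2f} Mbit/s  tx {tx:8.2f} Mbit/s")
```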

Tasks

01. [10p] Memory usage

Open ex01.py and take a look at the code. What is the difference between the methods do_append() and do_allocate()?

Use vmstat to monitor the memory usage while performing the following experiments. In the main method, call:

- The do_append method on its own (see Experiment 1).

- The do_allocate method on its own (see Experiment 2).

- Both methods as shown in the Experiment 3 area in the code.

- Both methods as shown in the Experiment 4 area in the code.

Offer an interpretation for the obtained results and change the code in do_append() so that the memory usage will be the same when performing Experiment 3 or Experiment 4.

HINTS: Python GC; Reference cycle
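The hint refers to how CPython frees memory. Reference counting releases an object as soon as nothing points to it. Objects that point to each other (a reference cycle) are reclaimed only by the cyclic garbage collector. The small standalone sketch below is not the code from ex01.py. It makes the difference visible with a weak reference, which observes an object without keeping it alive.

```python
import gc
import weakref

class Node:
    def __init__(self) -> None:
        self.other = None

def make_cycle():
    """Build a -> b -> a and return only a weak reference to a."""
    a, b = Node(), Node()
    a.other, b.other = b, a  # each node keeps the other's reference count above zero
    return weakref.ref(a)

gc.disable()  # leave only reference counting active
try:
    probe = make_cycle()
    alive_before_collect = probe() is not None  # the cycle survived leaving make_cycle()
    gc.collect()                                # the cycle detector finds and frees it
    alive_after_collect = probe() is not None
finally:
    gc.enable()

print(alive_before_collect, alive_after_collect)
```

Memory held by cycles is therefore released only when the collector runs, not at the point where the last external reference disappears.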

02. [20p] Swap space

[10p] Task A - Swap File

First, let us check what swap devices we have enabled. Check the NAME and SIZE columns of the following command:

$ swapon --show

No output means that there are no swap devices available.
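`swapon --show` reads the same information the kernel publishes in /proc/swaps. The minimal Python sketch below parses that table, which is useful for checking the active swap areas, for instance their priorities once several are enabled. The parser and its field names are illustrative. Sizes in that file are in KiB.

```python
from pathlib import Path
from typing import List, NamedTuple

class SwapArea(NamedTuple):
    filename: str
    kind: str  # "partition" or "file"
    size_kib: int
    used_kib: int
    priority: int

def parse_proc_swaps(text: str) -> List[SwapArea]:
    areas = []
    for line in text.splitlines()[1:]:  # skip the "Filename Type Size Used Priority" header
        fields = line.split()  # the kernel escapes whitespace inside paths
        if len(fields) == 5:
            name, kind, size, used, prio = fields
            areas.append(SwapArea(name, kind, int(size), int(used), int(prio)))
    return areas

if __name__ == "__main__":
    swaps = Path("/proc/swaps")
    if swaps.exists():
        for area in parse_proc_swaps(swaps.read_text()):
            print(area)
```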

If you ever installed a Linux distro, you may remember creating a separate swap partition. This, however, is only one method of creating swap space. The other is by adding a swap file. Run the following commands:

$ sudo swapoff -a
$ sudo dd if=/dev/zero of=/swapfile bs=1024 count=$((4 * 1024 * 1024))
$ sudo chmod 600 /swapfile
$ sudo mkswap /swapfile
$ sudo swapon /swapfile
$ swapon --show

Just to clarify what we did:

- disabled all swap devices

- created a 4GB zero-initialized file

- set the file's permissions so that only root can read or write it

- created a swap area from the file using mkswap (works on devices too)

- activated the swap area

The new swap area is temporary and will not survive a reboot. To make it permanent, we need to register it in /etc/fstab by adding a line such as this:

/swapfile swap swap defaults 0 0

[10p] Task B - Does it work?

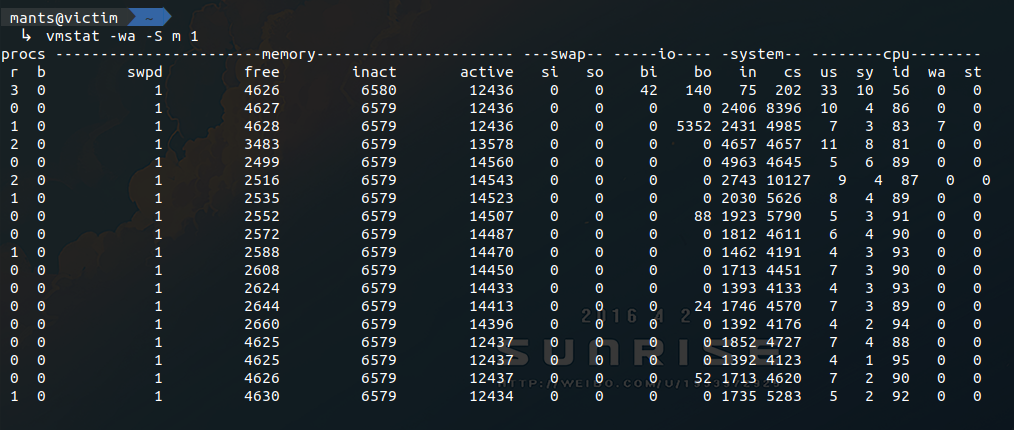

In one terminal run vmstat and look at the swpd and free columns.

$ vmstat -w 1

In another terminal, open a python shell and allocate a bit more memory than the available RAM. Identify the moment when the newly created swap space is being used.
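One way to do this is sketched below in Python. Linux backs anonymous memory with RAM only when a page is first written, so simply reserving a large buffer may not be enough. The sketch writes one byte into every page so that each page really has to be backed by RAM or swap. The function name and chunk size are assumptions. Pick a total slightly above what free reports as available.

```python
import mmap
from typing import List

def allocate_touched(total_bytes: int, chunk_bytes: int = 64 * 1024 * 1024) -> List[bytearray]:
    """Allocate total_bytes in chunks, writing to every page of every chunk."""
    page = mmap.PAGESIZE
    chunks = []
    remaining = total_bytes
    while remaining > 0:
        size = min(chunk_bytes, remaining)
        buf = bytearray(size)
        buf[::page] = b"\x01" * len(range(0, size, page))  # dirty one byte per page
        chunks.append(buf)
        remaining -= size
    return chunks
```

In the interactive shell, run something like `hog = allocate_touched(9 * 1024**3)` (a hypothetical 9 GiB for a machine with 8 GiB of RAM). Watch swpd grow in vmstat, then run `del hog` to release the memory.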

One thing you might notice is that the value in vmstat's free column is lower than before. This does not mean that you have less available RAM after creating the swap file. Remember using the dd command to create a 4GB file? A big chunk of RAM was used to buffer the data that was written to disk. If free drops to unacceptable levels, the kernel will make sure to reclaim some of this buffer/cache memory. To get a clear view of how much available memory you actually have, try running the following command:

$ free -h
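The distinction that free -h draws can also be read straight from /proc/meminfo. MemFree is memory that nothing uses at all. MemAvailable is the kernel's estimate of how much memory new applications can get without swapping, including reclaimable page cache. Below is a minimal Python sketch; the helper name is illustrative, and values are in kB as in the file.

```python
from pathlib import Path
from typing import Dict

def parse_meminfo(text: str) -> Dict[str, int]:
    """Map each /proc/meminfo key to its numeric value (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info

if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        info = parse_meminfo(meminfo.read_text())
        print(f"MemFree:        {info['MemFree'] / 1024:8.0f} MiB")
        print(f"MemAvailable:   {info['MemAvailable'] / 1024:8.0f} MiB")
        print(f"Buffers+Cached: {(info['Buffers'] + info['Cached']) / 1024:8.0f} MiB")
```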

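Observe that once you close the python shell and the memory is freed, swpd still displays a non-zero value. Why? Pages of other processes that were pushed out to swap stay there until they are accessed again; the kernel simply has no reason to clear the data from the swap area. If you really want to clean up the used swap space, try the following (note that swapon -a only re-enables the swap areas listed in /etc/fstab):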

$ vmstat
$ sudo swapoff -a && sudo swapon -a
$ vmstat

Create two swap files. Set their priorities to 10 and 20, respectively.

Include the commands (copy+paste) or a screenshot of the terminal.

Also list two advantages and two disadvantages of using a swap file compared to a swap partition.

03. [30p] Kernel Samepage Merging

KSM is a page de-duplication strategy introduced in kernel version 2.6.32. In case you are wondering, it's not the same thing as the file page cache. KSM was originally developed in tandem with KVM in order to detect data pages with exactly the same content and make their page table entries point to the same physical address (marked copy-on-write). The end goal was to allow more VMs to run on the same host. Since each page must be scanned for identical content, this solution had no chance of scaling well with the available quantity of RAM. So, the developers compromised by scanning only the private anonymous pages that were marked as likely candidates via madvise(addr, length, MADV_MERGEABLE).
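The task skeleton is not reproduced here, but Python (3.8+, Linux) can demonstrate the mechanism it relies on, because its mmap module exposes madvise() and the MADV_MERGEABLE constant. The sketch maps private anonymous memory, the only kind KSM considers, and fills every page with the same byte. It then marks the range as mergeable. The function name and fill value are assumptions.

```python
import mmap
from typing import Tuple

def mergeable_region(num_pages: int, fill: int = 0x5A) -> Tuple[mmap.mmap, bool]:
    """Map num_pages identical private anonymous pages and advise KSM to merge them.

    Returns the mapping and whether the madvise() call succeeded."""
    size = num_pages * mmap.PAGESIZE
    # fileno -1 gives an anonymous mapping; MAP_PRIVATE matters because KSM
    # only merges private anonymous pages, and mmap defaults to MAP_SHARED.
    region = mmap.mmap(-1, size, flags=mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS)
    page = bytes([fill]) * mmap.PAGESIZE
    for _ in range(num_pages):
        region.write(page)  # identical content in every page
    advice = getattr(mmap, "MADV_MERGEABLE", None)  # only defined on Linux
    if advice is None:
        return region, False
    try:
        region.madvise(advice)
    except OSError:  # e.g. the kernel was built without CONFIG_KSM
        return region, False
    return region, True
```

Keep the mapping referenced while ksmd works. For example, `region, ok = mergeable_region(262144)` maps about 1 GiB with 4 KiB pages; follow it with a `time.sleep(60)` while you watch pages_sharing.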

Download the skeleton for this task.

[10p] Task A - Check kernel support & enable ksmd

First things first, you need to verify that KSM was enabled during your kernel's compilation. For this, you need to check the Linux build configuration file. Hopefully, you should see something like this:

# on Ubuntu you can usually find it in your /boot partition
$ grep CONFIG_KSM /boot/config-$(uname -r)
CONFIG_KSM=y
# otherwise, you can find a gzip compressed copy in /proc
$ zcat /proc/config.gz | grep CONFIG_KSM
CONFIG_KSM=y

If you don't have KSM enabled, you could recompile the kernel with the CONFIG_KSM flag and try it, but you don't have to :)

Moving forward. The next thing on the list is to check that the ksmd daemon is running. Any configuration that we'll do will be through the sysfs files in /sys/kernel/mm/ksm. Consequently, you should switch to the root user: something like sudo echo 1 > /sys/kernel/mm/ksm/run will fail, because the output redirection is performed by your unprivileged shell, not by sudo.

- /…/run : this is 1 if the daemon is active; write 1 to it if it's not

- /…/pages_to_scan : this is how many pages will be scanned before going to sleep; you can increase this to 1000 if you want to see faster results

- /…/sleep_millisecs : this is how many ms the daemon sleeps in between scans; since you've modified pages_to_scan, you can leave this be

- /…/max_page_sharing : this is the maximum number of page table entries that can share a single de-duplicated physical page; in cases like this it's better to go big or go home, so set it to something like 1000000, just to be sure

There are a few more files in the ksm/ directory. We will still use one or two later on. But for now, configuring the previous ones should be enough. Google the rest if you're interested.
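The same settings can be applied from a Python process running as root. This minimal sketch assumes the sysfs files described above and the values suggested in this task. The function name is illustrative, and the directory is a parameter so that the script can be dry-run against a scratch directory. max_page_sharing is written first, because the kernel may refuse to change it (EBUSY) once pages are already being shared.

```python
from pathlib import Path
from typing import Dict

KSM_DIR = "/sys/kernel/mm/ksm"

# Ordered on purpose: max_page_sharing first, before ksmd starts merging,
# since the kernel may reject changes to it while shared pages exist.
SETTINGS = {
    "max_page_sharing": 1000000,
    "pages_to_scan": 1000,
    "run": 1,
}

def configure_ksm(settings: Dict[str, int] = SETTINGS, ksm_dir: str = KSM_DIR) -> Dict[str, int]:
    """Write each setting and read it back to confirm the kernel accepted it."""
    base = Path(ksm_dir)
    applied = {}
    for name, value in settings.items():
        (base / name).write_text(f"{value}\n")
        applied[name] = int((base / name).read_text())
    return applied
```

Run `configure_ksm()` from a root Python shell. A mismatch between the returned dictionary and `SETTINGS`, or an `OSError`, shows which file the kernel did not accept.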

[10p] Task B - Watch the magic happen

For this step it would be better to have a few terminals open. First, let's start a vmstat. Keep your eyes on the active memory column when we run the sample program.

$ vmstat -wa -S m 1

Next would be a good time to introduce two more files from the ksm/ sysfs directory:

- /…/pages_shared : this file reports how many shared (de-duplicated) physical pages are in use at the moment

- /…/pages_sharing : this file reports how many virtual page table entries point to the aforementioned physical pages

For this experiment we will also want to monitor the number of de-duplicated virtual pages, so have at it:

$ watch -n 0 cat /sys/kernel/mm/ksm/pages_sharing
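To log the counters rather than watch them, a small Python sketch can read both files. It derives two figures described in the kernel documentation. pages_sharing approximates how many pages were saved, and a high pages_sharing / pages_shared ratio indicates good sharing. The function names, the polling loop and the one-second interval are assumptions.

```python
import os
import time
from pathlib import Path
from typing import Dict

KSM_DIR = "/sys/kernel/mm/ksm"

def ksm_stats(ksm_dir: str = KSM_DIR) -> Dict[str, float]:
    base = Path(ksm_dir)
    shared = int((base / "pages_shared").read_text())    # physical pages kept
    sharing = int((base / "pages_sharing").read_text())  # additional entries sharing them
    page = os.sysconf("SC_PAGE_SIZE")
    return {
        "pages_shared": shared,
        "pages_sharing": sharing,
        "saved_mib": sharing * page / 2**20,
        "sharing_ratio": sharing / shared if shared else 0.0,
    }

def log_ksm(interval_s: float = 1.0, ksm_dir: str = KSM_DIR) -> None:
    """Print one line per interval until interrupted with Ctrl+C."""
    while True:
        s = ksm_stats(ksm_dir)
        print(f"{time.strftime('%H:%M:%S')} shared={s['pages_shared']:.0f} "
              f"sharing={s['pages_sharing']:.0f} saved={s['saved_mib']:.1f} MiB "
              f"ratio={s['sharing_ratio']:.1f}")
        time.sleep(interval_s)
```

Call `log_ksm()` in a spare terminal while the sample program runs.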

Finally, look at the provided code, compile it, and launch the program. As an argument, you will need to provide the number of pages that will be allocated and initialized with the same value. Since not all pages will be de-duplicated instantly, keep your system's RAM limitations in mind before deciding how much you can spare (1-2GB should be ok, right?)

The result should look something like Figure 1:

If you ever want to make use of this in your own experiments, remember to adjust the configuration of ksmd. Waking too often or scanning too many pages at once could end up doing more harm than good. See what works for your particular system.

Include a screenshot with the same output as the one in the spoiler above.

Edit the screenshot or note in writing at what point you started the application, where it reached maximum memory usage, the interval in which the KSM daemon was doing its job (during the 10s sleep interval) and where the process died.

[10p] Task C - Plot results

Now that you’ve observed the effects of KSM using vmstat, it’s time to visualize them. Generate a real-time plot that shows free memory, used memory, and memory used as buffers over time, based on the memory columns (free, buff, cache) of vmstat's output. The plot should dynamically adjust its axis ranges to the data: the x-axis should represent time and the y-axis the amount of memory. The plot should update in real time as new data is collected.

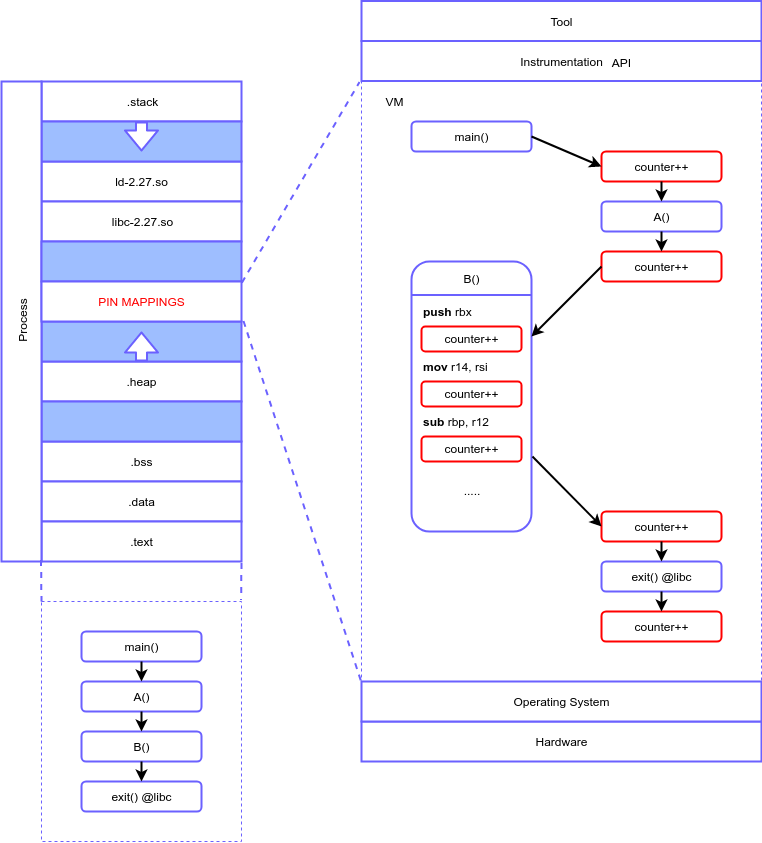
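One possible starting point is the Python sketch below, which makes a few assumptions. vmstat is started with -n so that its header is printed only once. Columns are located by their header names rather than by position; free, buff and cache are in KiB, vmstat's default unit. matplotlib must be available for the plotting part. Deriving used memory additionally needs the total (for example MemTotal from /proc/meminfo), so it is left out here.

```python
import subprocess
from typing import Dict, Iterable, Iterator, Sequence

def parse_vmstat(lines: Iterable[str]) -> Iterator[Dict[str, int]]:
    """Yield one {column name: value} dict per vmstat sample line."""
    header = None
    for line in lines:
        fields = line.split()
        if not fields or fields[0].startswith("procs"):  # group banner line
            continue
        if fields[0] == "r":  # column names: r b swpd free buff cache ...
            header = fields
            continue
        if header and len(fields) == len(header):
            yield dict(zip(header, map(int, fields)))

def live_plot(columns: Sequence[str] = ("free", "buff", "cache")) -> None:
    import matplotlib.pyplot as plt  # imported here so parse_vmstat works without matplotlib

    proc = subprocess.Popen(["vmstat", "-n", "-w", "1"], stdout=subprocess.PIPE, text=True)
    series = {c: [] for c in columns}
    plt.ion()
    _, ax = plt.subplots()
    curves = {c: ax.plot([], [], label=c)[0] for c in columns}
    ax.set_xlabel("time (s)")
    ax.set_ylabel("memory (KiB)")
    ax.legend()
    try:
        for sample in parse_vmstat(proc.stdout):
            for c in columns:
                series[c].append(sample[c])
                curves[c].set_data(list(range(len(series[c]))), series[c])
            ax.relim()
            ax.autoscale_view()  # rescale both axes to the data collected so far
            plt.pause(0.05)      # let the GUI event loop redraw
    finally:
        proc.terminate()
```

Call `live_plot()` from a script and stop it with Ctrl+C. Because the lookup is by name, the same parser also works with `vmstat -a`, whose inact/active columns can be passed in `columns`.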

04. [40p] Intel PIN

Broadly speaking, binary analysis is of two types:

- Static analysis - used in an offline environment to understand how a program works without actually running it.

- Dynamic analysis - applied to a running process in order to highlight interesting behavior, bugs or performance issues.

In case you are still wondering, in this exercise we are going to look at (one of) the best dynamic analysis tools available: Intel Pin. Specifically, what Pin does is called program instrumentation, meaning that it inserts user-defined code at arbitrary locations in the executable. The code is inserted at runtime, meaning that Pin can attach itself to a process, just like gdb.

Although Pin is closed source, the concepts that serve as its foundation are described in this paper. Since we don't have time to go through the whole material, we will offer a bird's-eye view of its architecture, just enough to get you started with the tasks.

When a process is started via Pin, the very first instruction is intercepted and new mappings are created in the virtual space of the process. These mappings contain libraries that Pin uses, the tool that the user wrote (which is compiled as a shared object) and a small sandbox that will act as a VM. During the execution, Pin will translate the original instructions into the sandbox on an as-needed basis and, according to the rules defined in the tool, insert arbitrary code. This code can be inserted at different levels of granularity:

- instruction

- basic block

- function

- image

The immediate advantages should be clear. From a performance evaluation standpoint alone, a few applications could be:

- obtaining metrics from programs that were not designed with this in mind

- hotpatching bugs without stopping the process

- detecting the most accessed code regions to prioritize manual optimization

Although this sounds great, we should not ignore some of the glaring disadvantages:

- overhead

- this is highly dependent on the amount of instrumentation and the instrumented code itself

- overall, this seems to have a bit more impact on ARM than on other architectures

- volatile

- remember that the instrumented code shares things like the virtual memory space and file descriptors with the original process

- while something like in-memory fuzzing is possible, the risk of breaking the process is very high

- limited use cases

- Pin works directly on a regular executable (with native machine code)

- Pin will not work (as intended) on interpreted languages and variations of these

In case you are wondering what else you can do with Intel Pin, check out TaintInduce. The authors of this paper wrote an architecture-agnostic taint analysis tool that successfully found 24 CVEs, 17 missing or wrongly emulated instructions in Unicorn and 1 mistake in the Intel Developer Manual.

For reference, use the Intel Pin User Guide (also contains examples).

[5p] Task A - Setup

In this tutorial we will build a Pin tool with the goal of instrumenting any memory reads/writes. For reads, we output the source buffer state before the operation takes place. For writes, we output the destination buffer states both before and after.

Download the skeleton for this task. First thing you will need to do is run setup.sh. This will download the Intel Pin framework into the newly created third_party/ directory.

Next, open src/minspect.cpp in an editor of your choice, but avoid modifying the code. In between tasks, we will apply diff patches to this file. This will allow us to gradually build our tool and observe its behavior at different stages during its development. However, altering the source in any significant manner may cause the patch to fail.

Let us apply the first patch before proceeding to the following task:

$ patch src/minspect.cpp patches/Task-A.patch

[10p] Task B - Instrumentation Callbacks

Looking at main(), most Pin API calls are self-explanatory. The only one that we're interested in is the following:

INS_AddInstrumentFunction(ins_instrum, NULL);

This call instructs Pin to trap on each instruction in the binary and invoke ins_instrum(). However, this happens only once per instruction. The role of the instrumentation callback that we register is to decide if a certain instruction is of interest to us. “Of interest” can mean basically anything. We can pick and choose “interesting” instructions based on their class, registers / memory operands, functions or objects containing them, etc.

Let's say that an instruction has indeed passed our selection. Now, we can use another Pin API call to insert an analysis routine before or after said instruction. While the instrumentation routine will never be invoked again for that specific instruction, the analysis routine will execute seamlessly for each pass.
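The split between the two kinds of callbacks can be illustrated with a toy model. The Python sketch below is not Pin code and uses no Pin API. It only mimics the idea described above. A selection (instrumentation) callback runs the first time an instruction address is seen. The analysis routine attached to a selected instruction runs on every execution. The class, the trace and the mnemonics are all hypothetical.

```python
from collections import Counter
from typing import Callable, Dict, Optional

class ToyInstrumenter:
    """Toy model of instrumentation vs. analysis callbacks (not the Pin API)."""

    def __init__(self, is_interesting: Callable[[str], bool]) -> None:
        self.is_interesting = is_interesting
        self.translated: Dict[int, Optional[Callable[[int], None]]] = {}  # address -> analysis routine
        self.instrumentation_calls = 0
        self.analysis_calls: Counter = Counter()

    def execute(self, address: int, mnemonic: str) -> None:
        if address not in self.translated:  # first encounter: run the instrumentation callback
            self.instrumentation_calls += 1
            self.translated[address] = self.analysis if self.is_interesting(mnemonic) else None
        routine = self.translated[address]
        if routine is not None:  # every execution: run the inserted analysis routine
            routine(address)

    def analysis(self, address: int) -> None:
        self.analysis_calls[address] += 1

# A hypothetical three-instruction loop body executed three times.
trace = [(0x10, "mov"), (0x14, "add"), (0x18, "jne")] * 3
tool = ToyInstrumenter(lambda mnemonic: mnemonic == "mov")
for address, mnemonic in trace:
    tool.execute(address, mnemonic)
print(tool.instrumentation_calls, dict(tool.analysis_calls))
```

The selection logic runs three times (once per distinct instruction), while the analysis routine runs three times for the single selected instruction, once per loop iteration.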

For now, let us observe only the instrumentation callback and leave the analysis routine registration for the following task. Take a look at ins_instrum(). Then, compile the tool and run any program you want with it. Waiting for it to finish is not really necessary. Stop it after a few seconds.

$ make
$ ./third_party/pin-3.24/pin -t obj-intel64/minspect.so -- ls -l 1>/dev/null

Just to make sure everything is clear: the default rule for make will generate an obj-intel64/ directory and compile the tool as a shared object. The way to start a process with our tool's instrumentation is by calling the pin util. -t specifies the tool to be used. Everything after -- should be the exact command that would normally be used to start the target process.

Note: here, we output information to stderr from our instrumentation callback. This is not good practice. The Pin tool and the target process share pretty much everything: file descriptors, virtual memory, etc. Normally, you will want to output these things to a log file. However, let's say we can get away with it for now, under the pretext of convenience.

Remember to apply the Task-B.patch before proceeding to the next task.

[10p] Task C - Analysis Callbacks (Read)

Going forward, we got rid of some of the clutter in ins_instrum(). As you may have noticed, the most recent addition to this routine is the for loop iterating over the memory operands of the instruction. We check whether each operand is the source of a read using INS_MemoryOperandIsRead(). If this check succeeds, we insert an analysis routine before the current instruction using INS_InsertPredicatedCall(). Let's take a closer look at how this API call works:

INS_InsertPredicatedCall(
    ins, IPOINT_BEFORE, (AFUNPTR) read_analysis,
    IARG_ADDRINT, ins_addr,
    IARG_PTR, strdup(ins_disass.c_str()),
    IARG_MEMORYOP_EA, op_idx,
    IARG_MEMORYREAD_SIZE,
    IARG_END);

The first three parameters are:

- ins: reference to the INS argument passed to the instrumentation callback by default.
- IPOINT_BEFORE: instructs Pin to insert the analysis routine before the instruction executes (see Instrumentation arguments for more details).
- read_analysis: the function that is to be inserted as the analysis routine.

Next, we pass the arguments for read_analysis(). Each argument is represented by a type macro and the actual value. When we don't have any more parameters to send, we end by specifying IARG_END. Here are all the arguments:

- IARG_ADDRINT, ins_addr: a 64-bit integer containing the absolute address of the instruction.
- IARG_PTR, strdup(ins_disass.c_str()): all objects in the callback's local context will be lost after we return; thus, we need to duplicate the disassembled code's string and pass a pointer to the copy.
- IARG_MEMORYOP_EA, op_idx: effective address of a specific memory operand; this argument is not passed by value, but instead recalculated each time and passed to the analysis routine seamlessly.
- IARG_MEMORYREAD_SIZE: size in bytes of the memory read; check the documentation for some important exceptions.

Take a look at what read_analysis() does. Recompile the tool and run it again (just as in task B). Finally, apply Task-C.patch and move on to the next task.

[10p] Task D - Analysis Callbacks (Write)

For the memory write analysis routine, we need to add instrumentation both before and after each instruction. The former needs to save the original buffer state, while the latter displays the information in its entirety. Since an instruction may write to more than one memory location, we push the initial buffer state hexdumps to a stack. Consequently, we need to add the post-write instrumentation in reverse order to ensure that the elements are popped from the stack in the correct order. Let's take a look at the pre-write instrumentation insertion:

INS_InsertPredicatedCall(
    ins, IPOINT_BEFORE, (AFUNPTR) pre_write_analysis,
    IARG_CALL_ORDER, CALL_ORDER_FIRST + op_idx + 1,
    IARG_MEMORYOP_EA, op_idx,
    IARG_MEMORYWRITE_SIZE,
    IARG_END);

We notice a new set of parameters:

IARG_CALL_ORDER, CALL_ORDER_FIRST + op_idx + 1: specifies the call order when multiple analysis routines are registered; see the CALL_ORDER enum's documentation for details.
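Another toy model in Python shows why the post-write callbacks must be visited in reverse operand order. This is not Pin code, and the operand addresses and buffer contents are hypothetical. Pre-write callbacks run in operand order and push the original contents, so the last operand's snapshot ends up on top of the stack. The post-write callbacks therefore have to go from the last operand to the first for every pop to match.

```python
from typing import Dict, List, Tuple

def simulate_write(operands: List[int], memory: Dict[int, bytes],
                   new_values: Dict[int, bytes]) -> List[Tuple[int, bytes, bytes]]:
    stack: List[Tuple[int, bytes]] = []
    # Pre-write callbacks, in operand order 0, 1, ..., n-1: save the original contents.
    for addr in operands:
        stack.append((addr, memory[addr]))
    # The instruction itself executes and overwrites every destination.
    for addr in operands:
        memory[addr] = new_values[addr]
    # Post-write callbacks, in reverse operand order: each pop returns the matching snapshot.
    report = []
    for addr in reversed(operands):
        saved_addr, before = stack.pop()
        if saved_addr != addr:
            raise RuntimeError("pre/post callbacks are out of order")
        report.append((addr, before, memory[addr]))
    return report

print(simulate_write([0x100, 0x200], {0x100: b"aa", 0x200: b"bb"},
                     {0x100: b"AA", 0x200: b"BB"}))
```

If the last loop iterated over `operands` in forward order instead, the first pop would return the snapshot of 0x200 while reporting 0x100, which is exactly the mismatch the reverse registration avoids.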

Recompile the tool. Test to see that the write analysis routines work properly. Apply Task-D.patch and let's move on to applying the finishing touches.

[5p] Task E - Finishing Touches

This is only a minor addition. Namely, we want to add a command line option -i that can be used multiple times to specify multiple image names (e.g.: ls, libc.so.6, etc.) The tool must forego instrumentation for any instruction that is not part of these objects. As such, we declare a Pin KNOB:

static KNOB<string> knob_img(KNOB_MODE_APPEND, "pintool", "i", "", "names of objects to be instrumented for branch logging");

We should not use argp or other alternatives. Instead, let Pin use its own parser for these things. knob_img will act as an accumulator for any argument passed with the -i flag. Observe its usage in ins_instrum().

Determine the shared object dependencies of your target binary of choice. Then try to recompile and rerun the Pin tool while specifying some of them as arguments.

$ ldd /bin/ls
linux-vdso.so.1 (0x00007ffd0d19b000)
libgtk3-nocsd.so.0 => /usr/lib/x86_64-linux-gnu/libgtk3-nocsd.so.0 (0x00007f32df3ad000)
libselinux.so.1 => /lib/x86_64-linux-gnu/libselinux.so.1 (0x00007f32df185000)
libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f32ded94000)
libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f32deb90000)
libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f32de971000)
libpcre.so.3 => /lib/x86_64-linux-gnu/libpcre.so.3 (0x00007f32de6ff000)
/lib64/ld-linux-x86-64.so.2 (0x00007f32df7d6000)
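To avoid typing the names by hand, a small Python sketch can turn ldd's output into -i arguments. It assumes that the tool matches images by base name, as the examples above (ls, libc.so.6) suggest; check ins_instrum() to confirm. The pin and tool paths are taken from the Task B command, and the helper names are hypothetical. Pin expects tool options after `-t <tool>` and before `--`. ldd does not list the executable itself, so add its name (e.g. ls) explicitly if you want it instrumented.

```python
import re
import subprocess
from typing import List

# "name => path (0xaddr)" or "name (0xaddr)", as printed by ldd
LDD_LINE = re.compile(r"^\s*(\S+)(?:\s+=>\s+\S+)?\s+\(0x[0-9a-fA-F]+\)")

def ldd_names(ldd_output: str) -> List[str]:
    """Base names of the shared objects listed by ldd."""
    names = []
    for line in ldd_output.splitlines():
        match = LDD_LINE.match(line)
        if match:
            names.append(match.group(1).rsplit("/", 1)[-1])  # "/lib64/ld-linux..." -> "ld-linux..."
    return names

def deps_of(binary: str) -> List[str]:
    """Run ldd on a binary and return the base names of its dependencies."""
    out = subprocess.run(["ldd", binary], capture_output=True, text=True, check=True).stdout
    return ldd_names(out)

def pin_command(target: List[str], images: List[str],
                pin: str = "./third_party/pin-3.24/pin",
                tool: str = "obj-intel64/minspect.so") -> List[str]:
    """Build: pin -t <tool> -i <img> ... -- <target command>."""
    cmd = [pin, "-t", tool]
    for image in images:
        cmd += ["-i", image]
    return cmd + ["--", *target]
```

For example, `subprocess.run(pin_command(["ls", "-l"], ["ls", "libc.so.6"]))` instruments only the ls executable and libc.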

This concludes the tutorial. The resulting Pin tool can now be used as a starting point for developing a Taint analysis engine. Discuss more with your lab assistant if you're interested.

Patch your way through all the tasks and run the pin tool only for the base object of any binutil.

Include a screenshot of the output.

05. [10p] Feedback

Please take a minute to fill in the feedback form for this lab.