Lab 12 - Machine Learning

0. Objectives

- Understand basic concepts of machine learning

- Remember examples of real-world problems that can be solved with machine learning

- Learn the most common performance evaluation metrics for machine learning models

- Analyse the behaviour of typical machine learning algorithms using the most popular techniques

- Be able to compare multiple machine learning models

1. Introduction

Just like chess players improve their technique by watching hundreds of games from top players, computers can learn to perform certain tasks by “looking” at data. These computers are sometimes called “machines” and this data observation step is also known as “learning”. Simple, isn’t it? So let’s define “Machine Learning” (ML) more formally, as stated by Tom Mitchell: a program is said to learn from experience E with respect to some task T and some performance measure P if its performance on T, as measured by P, improves with experience E. Let’s make this more human-readable with an example. Say that T is the task of playing chess, E is the set of chess matches on which the algorithm was trained and P is the probability that the program wins the next game of chess.

What is truly important, however, is that machine learning algorithms, unlike traditional ones, do not have to be explicitly programmed to solve a particular task. Just like in the previous example, they learn from the data fed into them and are afterwards able to predict results for new scenarios. As you might expect, this isn’t just black magic. The majority of ML algorithms are based on maths and statistics, but also on a fair amount of engineering.

https://medium.com/@tekaround/train-validation-test-set-in-machine-learning-how-to-understand-6cdd98d4a764

2. Supervised Learning vs Unsupervised Learning

Generally, in machine learning, there are two types of tasks: supervised and unsupervised.

A. Supervised Learning

We refer to supervised learning when we have prior knowledge of what the output of the system should look like. More formally, after going through lots of (X, y) pairs, the model should be able to determine a mapping function ĥ which approximates f(X) = y as accurately as possible. In machine learning, the entire set of (X, y) pairs is often called a corpus or dataset, and an algorithm that learned from this corpus is sometimes called a trained model or simply a model.

For example, let’s say that you want to build a smart car selling platform and you want to provide sellers with the ability to know what should be a “fair price” based on the specs and age of their cars. For that, you would need to provide your model with a set of tuples that might look like this:

(bhp, fuel_type, displacement, weight, torque, suspension, car_age, car_price)

In this case, X is formed from the tuple slices (bhp, fuel_type, displacement, weight, torque, suspension, car_age) and each slice component is called a feature. Likewise, y is represented by the car_price and it's usually called label or ground truth. After learning from the provided corpus, a process that is commonly called model fitting, our model should be able to predict an approximation of the car price ŷ for new ages and specs X.

https://bigdata-madesimple.com/machine-learning-explained-understanding-supervised-unsupervised-and-reinforcement-learning/

Because a numerical or continuous output is expected as a result, the aforementioned scenario is an example of a regression task. If y was a label that can take the values “cheap”, “fair” or “expensive”, then that would be called a classification task and y can also be referred to as a class.
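To make the vocabulary concrete, here is a minimal sketch that splits a few car tuples into features X and labels y, then turns the continuous price into a class. The rows and the price thresholds are invented for illustration only; they are not taken from a real dataset.

```python
# Hypothetical rows, in the order
# (bhp, fuel_type, displacement, weight, torque, suspension, car_age, car_price).
dataset = [
    (110, "diesel", 1.6, 1300, 250, "standard", 5, 9500.0),
    (150, "petrol", 2.0, 1450, 200, "sport", 2, 17000.0),
    (75, "petrol", 1.2, 1050, 110, "standard", 10, 3500.0),
]

# Features X: every component except the last one. Label y: the price.
X = [row[:-1] for row in dataset]
y = [row[-1] for row in dataset]


def price_class(price, cheap_below=5000.0, expensive_above=15000.0):
    """Map a continuous price to a class (thresholds are illustrative)."""
    if price < cheap_below:
        return "cheap"
    if price > expensive_above:
        return "expensive"
    return "fair"


# A regression task uses y as-is; a classification task would use y_classes.
y_classes = [price_class(price) for price in y]
print(y_classes)
```

The same X can therefore feed either kind of task; only the type of label changes.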

https://towardsdatascience.com/do-you-know-how-to-choose-the-right-machine-learning-algorithm-among-7-different-types-295d0b0c7f60

B. Unsupervised Learning

In the case of unsupervised learning, the training data is not labeled anymore, so the machine learning algorithm must take decisions without having a ground truth as reference. That means that your dataset is no longer made out of (X, y) pairs, but only of X entries. Consequently, the job of the model would be to provide meaningful insights about those X entries, by finding various patterns in the data.

https://medium.com/mlrecipies/supervised-learning-v-s-unsupervised-learning-using-machine-learning-98de75200415

The most common tasks for this learning approach are clustering tasks. In this type of problem, the model must learn how to group data together, forming components or clusters. Say that, given a table with information about all the Facebook users, you would like to recommend personalized ads to every single person. This sounds like a very difficult task, because there is a huge number of users on the platform. But what if we group users together based on their traits (or features, as the data scientist within you might say)? Then we can suggest the same bundle of ads to a whole group of users, making the advertising process easier and cheaper.

https://towardsdatascience.com/introduction-to-machine-learning-for-beginners-eed6024fdb08

3. Performance Evaluation in Machine Learning

Generally, in the early phase of developing a machine learning algorithm, the focus is less on computational resources (RAM, CPU, IO etc.) and more on the ability of the model to generalize well. That means that our algorithm is trained well enough to provide accurate answers for data it has never seen before (predict a car price for a new car posted on the platform, classify a new vehicle or assign a freshly registered Facebook user to a group). Hence, at first, we try to build a robust and accurate model and only then work towards making it as computationally inexpensive as possible.

A. Training & Test Sets

Evaluating machine learning algorithms depends on the type of problem we are trying to solve. But in most cases, we want to split the data into a training set and a test set. As the names suggest, the former is used to actually train the model and the latter is used to verify how well the model generalizes to unseen data. For the most common machine learning tasks, the split ratio ranges from 80-20 to 90-10, depending on the size of the corpus. When a huge amount of data is available (say millions or billions of entries), a smaller proportion of the dataset is used as a test set (even 1%), because the absolute number of test entries is already large enough. For instance, 1% of 10M entries is 100k, which is generally regarded as a lot of data to test on.

https://data-flair.training/blogs/train-test-set-in-python-ml/
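The split described above can be sketched in a few lines of plain Python. The helper name `split_dataset`, the fixed seed and the 100 stand-in entries are assumptions made for this illustration; in the exercises, Scikit-learn's own `train_test_split` function from `sklearn.model_selection` does this job.

```python
import random


def split_dataset(rows, test_ratio=0.2, seed=42):
    """Shuffle a copy of the rows and split it into (train, test)."""
    shuffled = list(rows)
    # Shuffling first avoids a biased split when the data is ordered.
    random.Random(seed).shuffle(shuffled)
    test_size = int(round(len(shuffled) * test_ratio))
    return shuffled[test_size:], shuffled[:test_size]


rows = list(range(100))  # stand-in for 100 dataset entries
train, test = split_dataset(rows, test_ratio=0.2)
print(len(train), len(test))
```

Every entry ends up in exactly one of the two sets, so the model is never evaluated on data it was trained on.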

B. Classification Problems

Let’s begin our performance analysis journey by analyzing a classification problem. Let’s suppose that you have a model that was trained to predict whether a given image corresponds to a cat or not. As you already know, this is a supervised learning task in which the model must learn to predict one of two classes - “cat” or “non-cat”. In this case, the model is also called a binary classifier.

Confusion Matrix

You fit the model with your training data, you ask it for predictions on the test set and now you have two results: the ground truth y that was part of your dataset and the predictions ŷ that have just been yielded by your machine learning algorithm. So how do you assess your model in this particular scenario? One way is to build a confusion matrix, like the one below:

https://glassboxmedicine.com/2019/02/17/measuring-performance-the-confusion-matrix/

Now don’t get confused (excuse the pun). It’s actually quite simple to interpret. There are 4 possible outcomes for each prediction yielded by your binary classifier:

- True Positives (y = ŷ and ŷ = “cat”): the model identified a cat in the image and there actually is a cat in the image.

- False Positives (y != ŷ and ŷ = “cat”): the model identified a cat in the image, but there was no cat in the image.

- False Negatives (y != ŷ and ŷ = “non-cat”): the model did not recognize a cat in the image, but there was a cat in the image.

- True Negatives (y = ŷ and ŷ = “non-cat”): the model did not recognize a cat in the image and there was indeed no cat in the image.
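The four outcomes can be counted directly from the ground truth and the predictions. The sketch below uses a tiny made-up set of labels, and the helper name `confusion_counts` is hypothetical.

```python
def confusion_counts(y_true, y_pred, positive="cat"):
    """Return (TP, FP, FN, TN) for a binary classification problem."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # predicted cat, really a cat
        elif pred == positive:
            fp += 1  # predicted cat, but no cat
        elif truth == positive:
            fn += 1  # missed a real cat
        else:
            tn += 1  # correctly predicted non-cat
    return tp, fp, fn, tn


# Made-up labels, for illustration only.
y_true = ["cat", "cat", "non-cat", "non-cat", "cat", "non-cat"]
y_pred = ["cat", "non-cat", "cat", "non-cat", "cat", "non-cat"]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)
```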

These 4 scenarios can have different levels of importance, depending on the problem one wants to solve. For a smart antivirus, keeping the number of false negatives as low as possible is crucial, even if that means an increase in false positives. Naturally, it’s better to have annoying warnings than to have your computer infected because the antivirus was unable to identify the threat. But if you don’t have unusual requirements for your model and you just want to assess its general performance, there are 3 metrics that can be derived from this matrix:

Accuracy

This is the most intuitive metric and represents the number of correctly predicted labels over the total number of predictions, (TP + TN) / (TP + TN + FP + FN) using the initials of the 4 outcomes above, regardless of whether a prediction is positive or negative. However, judging the model by this single metric can be misleading. Consider the case of credit card fraud. Most transactions are perfectly valid and only a handful of them are fraudulent. A model trained on this data could learn to predict that all transactions are safe, treating the fraudulent ones as mere outliers. On a dataset of 1 million transactions of which 1000 are fraudulent, its accuracy would be 99.9%. But such a model is useless, because letting 1000 frauds go unnoticed cannot be allowed.
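The arithmetic behind the fraud example can be checked with a short sketch. It assumes that 1000 of the 1 million transactions are fraudulent (which is what the 99.9% figure implies) and that the model labels every transaction as safe.

```python
total = 1_000_000
frauds = 1_000  # assumption that matches the 99.9% figure

# A model that labels every single transaction as "safe" (negative).
true_positives = 0               # no fraud is ever flagged
false_positives = 0              # no valid transaction is flagged either
false_negatives = frauds         # every fraud is missed
true_negatives = total - frauds  # every valid transaction is "correct"

accuracy = (true_positives + true_negatives) / total
frauds_caught_ratio = true_positives / frauds

print(accuracy, frauds_caught_ratio)
```

The accuracy looks excellent, yet the share of frauds actually caught is zero, which is why accuracy alone is not enough on imbalanced data.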

Precision

The precision is the number of correctly classified positive examples divided by the total number of predicted positive examples: TP / (TP + FP). If the precision is very high, the model rarely classifies non-cat images as cat images.

Recall

The recall is the number of correctly classified positive examples divided by the total number of actual positive examples: TP / (TP + FN). If the recall is very high, the model rarely misses cat images.

When you have high recall and low precision, most of the cat images are correctly recognized, but there are a lot of false positives. In contrast, when you have low recall and high precision, we miss a lot of cat images, but those predicted as cat images have a high probability of being indeed cat images and not something else.

https://towardsdatascience.com/understanding-confusion-matrix-a9ad42dcfd62
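The two trade-off scenarios described above can be illustrated with a small sketch. The counts are made up, and returning 0.0 when a denominator is zero is a convention chosen here, not something fixed by the definitions.

```python
def precision(tp, fp):
    """Share of predicted positives that are real positives."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of real positives that were found by the model."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# High recall, low precision: most cats found, many false alarms.
eager = (precision(tp=90, fp=60), recall(tp=90, fn=10))

# Low recall, high precision: many cats missed, few false alarms.
cautious = (precision(tp=30, fp=2), recall(tp=30, fn=70))

print(eager)
print(cautious)
```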

F1 Score

Ideally, you would want both high recall and high precision, but that is not always possible. So you can either choose the trade-off you are most comfortable with or combine the 2 metrics into 1 by using the F1 score, which is the harmonic mean of the 2:

$$F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$

This single metric is more generic, goes from 0 (worst) to 1 (best) and together with accuracy, can give you a solid intuition on the performance of your model.
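A short sketch shows why the harmonic mean is used: unlike the arithmetic mean, it stays low when one of the two metrics is low. The input values are made up and the helper name `f1` is hypothetical.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


balanced = f1(0.8, 0.8)  # both metrics are decent
skewed = f1(1.0, 0.1)    # perfect precision, very poor recall
arithmetic_mean = (1.0 + 0.1) / 2

print(balanced, skewed, arithmetic_mean)
```

For the skewed case the arithmetic mean would still report 0.55, while the F1 score drops below 0.2 and exposes the poor recall.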

Generic Confusion Matrix

Don’t take the terms “positive” and “negative” written in the confusion matrix above literally! They are actually placeholders for the 2 classes (“cat” and “non-cat”) the model is trying to predict. This means that you can use more generic classes like “cat” and “dog” or “human” and “animal”, depending on the problem you want to solve. Moreover, the confusion matrix can be generalized to more than 2 classes (see the image below), having the same metrics and ways of computing them.

https://dev.to/overrideveloper/understanding-the-confusion-matrix-264i
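As a sketch of the generalization to more than 2 classes, the code below builds a confusion matrix for 3 made-up classes and derives precision and recall per class. The convention chosen here is that rows are ground truth classes and columns are predicted classes; the helper names and the labels are hypothetical.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, classes):
    """matrix[i][j] = entries of true class i predicted as class j."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in classes] for t in classes]


def per_class_metrics(matrix, classes):
    """Return {class: (precision, recall)}, one class vs. the rest."""
    metrics = {}
    for i, name in enumerate(classes):
        tp = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column sum
        actual = sum(matrix[i])                    # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        metrics[name] = (precision, recall)
    return metrics


classes = ["cat", "dog", "bird"]
y_true = ["cat", "cat", "dog", "dog", "bird", "bird", "cat", "dog"]
y_pred = ["cat", "dog", "dog", "dog", "bird", "cat", "cat", "bird"]

matrix = confusion_matrix(y_true, y_pred, classes)
metrics = per_class_metrics(matrix, classes)
# Accuracy is the diagonal (correct predictions) over all predictions.
accuracy = sum(matrix[i][i] for i in range(len(classes))) / len(y_true)
print(matrix, metrics, accuracy)
```

Each class takes the role of “positive” in turn, with all the other classes together playing the role of “negative”.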

C. Regression Problems

As it was previously mentioned, supervised machine learning can also imply solving regression tasks, where a numerical or continuous value is used as a label. Let’s take an example. Suppose that you want to predict the budget for an advertisement campaign, based on the revenue of the company. For this task, your model will learn from a training set of (X, y) pairs and will try to find the best ĥ that approximates the behaviour of the function f(X) = y. If we assume that the relationship between the two is linear, your model must learn how to draw a line that fits the points (X, y) the best. A visual representation can be seen in the figure below:

https://stackabuse.com/multiple-linear-regression-with-python/

Root Mean Squared Error

But how do we know if that line is drawn correctly? Well, first we must define an error or loss function that tells us mathematically how far from the points the line has been drawn. In linear regression tasks, the most common approach is to use the root mean squared error (RMSE), given below for a set of n entries. You can sometimes drop the root and just compute the MSE to avoid extra computations.

$$\text{RMSE} = \sqrt{\frac{1}{n} \sum_{j=1}^{n} (y_j - \hat{y}_j)^2}$$

In this formula, yj is the ground truth and ŷj is the result of the partially learned function ĥ(Xj). Basically, the model must apply successive corrections to ĥ, such that the predicted ŷj values lead to a smaller RMSE. This process of minimizing the loss is also called optimization and is one of the foundational principles of machine learning. You can find more about it here.

Similarly to the classification tasks, for regression problems, the value of the RMSE can be used as a performance metric for the model.
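As a minimal sketch, MSE and RMSE can be computed by hand from the ground truth and the predictions. The values below are made up for illustration.

```python
import math


def mse(y_true, y_pred):
    """Mean of the squared differences between truth and prediction."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Square root of the MSE, expressed in the same unit as y."""
    return math.sqrt(mse(y_true, y_pred))


y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 11.0]

print(mse(y_true, y_pred), rmse(y_true, y_pred))
```

Because the errors are squared before averaging, the single prediction that is off by 2 contributes more to the result than the two predictions that are off by 1 combined.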

R² Correlation

Now let’s say that your model was properly trained and you have some predicted labels ŷ and some ground truth values y. In the case of classification problems, with these two pieces of information you could immediately compute the F1 score or the accuracy. But with regression, a 0 to 1 score cannot be simply derived. One metric that can be used, however, is the R² score (also known as the coefficient of determination), computed using the following formula:

$$R^2 = 1 - \frac{\sum_{j=1}^{n} (y_j - \hat{y}_j)^2}{\sum_{j=1}^{n} (y_j - \bar{y})^2}$$

where ŷj is the predicted value, ȳ is the mean of the ground truth labels and yj is the ground truth. This score lies between -∞ and 1 and has the following interpretation:

- Close to 1: the predictions explain almost all of the variation in y; for a linear model, this indicates a strong linear relationship between X and y (positive or negative)

- Close to 0: the model does no better than always predicting the mean ȳ; a linear relationship between X and y cannot be identified

- Negative: the model does worse than always predicting the mean ȳ

The figures below show data with a strong positive linear relationship, no linear relationship and a strong negative linear relationship between X and y:

https://statistics.laerd.com/stata-tutorials/pearsons-correlation-using-stata.php
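A minimal sketch of the R² computation, assuming the usual definition of 1 minus the ratio between the squared prediction errors and the squared deviations of y from its mean. The values are made up, the helper name `r_squared` is hypothetical, and the code assumes that the ground truth values are not all identical (otherwise the denominator is 0).

```python
def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


y_true = [3.0, 5.0, 7.0, 9.0]

good = r_squared(y_true, [2.9, 5.1, 7.0, 9.1])       # close to the truth
mean_only = r_squared(y_true, [6.0, 6.0, 6.0, 6.0])  # always the mean
bad = r_squared(y_true, [9.0, 7.0, 5.0, 3.0])        # reversed trend

print(good, mean_only, bad)
```

Always predicting the mean gives exactly 0, and predictions that are worse than the mean push the score below 0.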

D. Underfitting vs Overfitting

Splitting the data into a training set and a test set not only helps us determine the accuracy or error of the model’s predictions, but can also give us insight into its behaviour and generalization capabilities.

Let’s take a trained binary classifier as an example and the accuracy as a performance metric. If it has a low test score but a high training score, the model relied too much on the training data and is not able to generalize well to entries it has never seen before. In this case, we say that it has overfit the training data, or that the model has a high variance. In contrast, if the model learned a function that is too generic, the problem of underfitting or high bias occurs. This time, the problem can be inferred from a training score that is too low. These situations are visually represented in the figures below:

https://medium.com/greyatom/what-is-underfitting-and-overfitting-in-machine-learning-and-how-to-deal-with-it-6803a989c76
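The reasoning above can be turned into a rough rule of thumb. The sketch below is only an illustration: the function name and both thresholds (`min_train` and `max_gap`) are arbitrary assumptions, and sensible values depend on the problem and the dataset.

```python
def diagnose_fit(train_score, test_score, min_train=0.8, max_gap=0.1):
    """Classify the fitting behaviour from training and test accuracy."""
    if train_score < min_train:
        # The model cannot even describe the data it was trained on.
        return "underfitting (high bias)"
    if train_score - test_score > max_gap:
        # Good on the training data, much worse on unseen data.
        return "overfitting (high variance)"
    return "reasonable fit"


print(diagnose_fit(0.99, 0.70))
print(diagnose_fit(0.65, 0.63))
print(diagnose_fit(0.88, 0.85))
```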

The underlying causes of the aforementioned problems depend heavily on the model and how its hyperparameters were fine-tuned. Discussing them is outside the scope of this laboratory class, but one can learn more about those topics from these articles: Underfitting & Overfitting, Hyperparameters.

E. Clustering Algorithms

In the end, we should talk a little bit about unsupervised learning. As you already know, for such tasks, there is no ground truth - just a set of X values that might have some underlying structure or pattern that can be learned. Evaluating a model without having something as reference might seem a pretty difficult task. And in some scenarios, you might be right!

Say that we wanted to group images containing handwritten digits into clusters and at the end of the learning process, our model grouped the data like this:

https://towardsdatascience.com/graduating-in-gans-going-from-understanding-generative-adversarial-networks-to-running-your-own-39804c283399

At first glance, the clustering outcome looks good, but how can we express this “good-looking” result in a more formal manner? One solution is to measure how compact the clusters are, yet distant from one another, by computing a silhouette score. Because the formulas are too cumbersome to write in here, I will leave you a link, where everything is explained clearly. However, one might understand the concept by looking at this simple example:

http://blog.data-miners.com/2011/03/cluster-silhouettes.html
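For small datasets, the silhouette score can be sketched in plain Python. For each point, a is the mean distance to the other points of its own cluster and b is the mean distance to the points of the nearest other cluster; the silhouette of the point is (b - a) / max(a, b), and the score is the mean over all points. The points and labels below are made up, the helper name `mean_silhouette` is hypothetical, and the code assumes 2D points, at least 2 clusters and points that are not all identical.

```python
import math


def mean_silhouette(points, labels):
    """Mean silhouette coefficient over all points (2D, >= 2 clusters)."""
    scores = []
    for i, (p, label) in enumerate(zip(points, labels)):
        # Group the distances from p to every other point by cluster.
        dists = {}
        for j, (q, other) in enumerate(zip(points, labels)):
            if i != j:
                d = math.hypot(p[0] - q[0], p[1] - q[1])
                dists.setdefault(other, []).append(d)
        own = dists.pop(label, [])
        if not own:  # a cluster with a single point scores 0
            scores.append(0.0)
            continue
        a = sum(own) / len(own)                           # compactness
        b = min(sum(d) / len(d) for d in dists.values())  # separation
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
good_labels = [0, 0, 0, 1, 1, 1]  # matches the two visible groups
bad_labels = [0, 1, 0, 1, 0, 1]   # mixes the two groups

good = mean_silhouette(points, good_labels)
bad = mean_silhouette(points, bad_labels)
print(good, bad)
```

Compact, well-separated clusters score close to 1, while a grouping that mixes the two blobs scores much lower.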

Exercises

The exercises will be solved in Python, using various popular libraries that are usually integrated in machine learning projects:

- Scikit-Learn: fast model development, performance metrics, pipelines, dataset splitting

- Pandas: data frames, csv parser, data analysis

- NumPy: scientific computation

- Matplotlib: data plotting

All tasks are tutorial based and every exercise will be associated with at least one “TODO” within the code. Those tasks can be found in the exercises package, but our recommendation is to follow the entire skeleton code for a better understanding of the concepts presented in this laboratory class. Each functionality is properly documented and for some exercises, there are also hints placed in the code.

- ImportError: cannot import name 'main'

$ sudo su

$ pip install ...

- AttributeError: 'module' object has no attribute 'SSL_ST_INIT'

$ sudo python -m easy_install --upgrade pyOpenSSL

MATPLOTLIB TROUBLESHOOTING:

- ImportError: No module named '_tkinter', please install the python3-tk package

$ sudo apt-get install python3-tk # ignore errors

Exercise 0

Fill out the feedback form for this course at https://acs.curs.pub.ro

(0.5p) Exercise 1

- Download the skeleton: 12._lab_ml.zip

- Make sure Python 3 is installed:

sudo apt-get update

sudo apt-get install python3.6

- Make sure pip and virtualenv are installed:

sudo apt-get install python3-pip

sudo pip3 install virtualenv

- Create a Python 3 virtual environment in the skeleton directory:

virtualenv venv

- Activate your Python 3 virtual environment:

source venv/bin/activate

- Install all the necessary dependencies specified in the requirements.txt file:

pip3 install -r requirements.txt

deactivate

We usually code within a virtual environment because it keeps the dependencies of different projects separate.

Exercise 2

In this exercise, you will learn how to properly evaluate a classifier. We chose a decision tree for this example, but feel free to explore other alternatives. You can find out more about decision trees here. For all the associated tasks, you will use the diabetes.csv file placed in the resources directory. The model must learn to determine whether the patient suffers from diabetes (0 or 1) by looking at a dataset consisting of the following features:

- Number of pregnancies

- Glucose level

- Blood pressure

- Skin thickness

- Insulin level

- Body Mass Index (BMI)

- Diabetes pedigree function (likelihood of diabetes based on family history)

- Age

The solution for this exercise should be written in the TODO sections marked in the classification.py file.

(0.5p) Task A

Follow the skeleton code and understand what it does. Afterwards, you must be able to answer the assistant's questions.

(1.0p) Task B

Evaluate the classifier by manually computing the accuracy, precision, recall and F1 score. These metrics are derived from the confusion matrix but you don't have to build this matrix yourself. You can use Scikit-learn for that (Confusion Matrix).

(1.0p) Task C

Evaluate the classifier using the metrics package from the Scikit-learn library. Again, accuracy, precision, recall and F1 score are required.

(1.0p) Task D

Extend the solution for Task B so that it can accommodate more than 2 classes (if you haven't done that already). Test your performance evaluation functions by using the diabetes_multi.csv file as input.

(0.5p) Task E

Comment on the difference in score values between the binary classifier and the multiclass classifier. Again, you must be able to answer the assistant's questions.

Exercise 3

In this exercise, you will learn how to properly evaluate a regression model. We chose a simple linear regressor for this example, but feel free to explore other alternatives. You can find out more about linear regressors here. For all the associated tasks, you will use the weather.csv file placed in the resources directory. The model must learn to determine what is the maximum temperature for a certain day (y) based on the minimum temperature (X).

The solution for this exercise should be written in the TODO sections marked in the regression.py file.

(0.5p) Task A

Follow the skeleton code and understand what it does. Afterwards, you must be able to answer the assistant's questions.

(1.0p) Task B

Evaluate the regressor by manually computing the Root Mean Squared Error (RMSE), Mean Absolute Error (MAE) and R² score.

(1.0p) Task C

Evaluate the regressor using the metrics package from the Scikit-learn library. Again, RMSE, MAE and R² score are required.

(1.0p) Task D (Bonus)

Train the model on variously sized chunks of the original dataset and notice how the RMSE changes. To better illustrate the behaviour, you should build a plot having the data size on the X axis and the RMSE value on the Y axis. Moreover, you should be able to explain the observed behaviour to the assistant.

Exercise 4

In this exercise, you will learn how to properly evaluate the data fitting behaviour of the binary classifier from exercise 2. For all the associated tasks, you will use the diabetes.csv file again, which is placed in the same resources directory. This time, 3 models will be trained with different parameters and your job is to analyse their behaviour. For that, you will look at the accuracy of each model computed on both the training set and the test set.

The solution for this exercise should be written in the TODO sections marked in the fitting.py file.

(1.0p) Task A

For each model, make predictions on both the training set and test set and compute the corresponding accuracy values.

(0.5p) Task B

Comment on the results by specifying which model is the best in terms of fitting and which models overfit or underfit the dataset.

Exercise 5

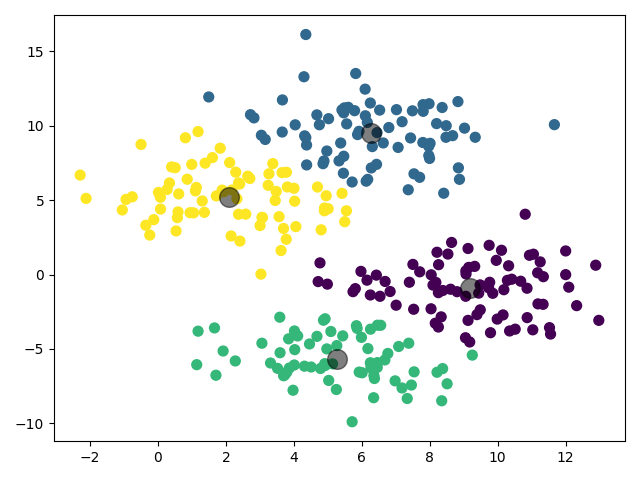

In this exercise, you will learn how to properly evaluate a clustering model. We chose a K-means clustering algorithm for this example, but feel free to explore other alternatives. You can find out more about K-means clustering algorithms here. For all the associated tasks, you don't have to use any input file, because the clusters are generated in the skeleton. The model must learn how to group together points in a 2D space.

The solution for this exercise should be written in the TODO sections marked in the clustering.py file.

Task A

Compute the silhouette score of the model by using a Scikit-learn function found in the metrics package.

Task B

Fetch the centres of the clusters (the model should already have them ready for you) and plot them together with a colourful 2D representation of the data groups. Your plot should look similar to the one below:

You can also play around with the standard deviation of the generated blobs and observe the different outcomes of the clustering algorithm. You should be able to discuss these observations with the assistant.

Feedback

Please take a minute to fill in the feedback form for this lab.