Lab 05 - I/O Monitoring (Linux)

Objectives

- Offer an introduction to I/O monitoring.

- Get you acquainted with a few standard Linux monitoring tools and their outputs, for monitoring the impact of I/O on the system.

- Give you the intuition needed to compare two systems that are relatively similar but differ in I/O behaviour.

Proof of Work

Before you start, create a Google Doc. Here, you will add screenshots / code snippets / comments for each exercise. Whatever you decide to include, it must prove that you managed to solve the given task (so don't show just the output, but how you obtained it and what conclusion can be drawn from it). If you decide to complete the feedback for bonus points, include a screenshot with the form submission confirmation, but not with its contents.

When done, export the document as a PDF and upload it to the appropriate assignment on Moodle. The deadline is 23:55 on Friday.

Introduction

01. Reading and Writing Data - Memory Pages

The Linux kernel breaks disk I/O into pages. The default page size on most Linux systems is 4K, so the kernel reads and writes disk blocks in and out of memory in 4K pages. You can check the page size of your system with getconf:

# getconf PAGESIZE
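
The same value can be read from a program. The following is a minimal sketch using only the Python standard library; it should report the number that getconf prints on the same machine.

```python
import os
import resource

# Ask the C library for the page size in bytes.
page_size = os.sysconf("SC_PAGE_SIZE")

# resource.getpagesize() is a second standard-library route to the same number.
same_value = resource.getpagesize()

print(f"page size: {page_size} bytes ({page_size // 1024} KiB)")
print(f"resource.getpagesize(): {same_value} bytes")
```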

02. Major and Minor Page Faults

Linux, like most UNIX systems, uses a virtual memory layer that maps into physical address space. This mapping is “on-demand” in the sense that when a process starts, the kernel only maps what is required. When an application starts, the kernel searches the CPU caches and then physical memory. If the data does not exist in either, the kernel issues a Major Page Fault (MPF). An MPF is a request to the disk subsystem to retrieve pages from the disk and buffer them in RAM.

Once memory pages are mapped into the buffer cache, the kernel will attempt to use these pages, resulting in a Minor Page Fault (MnPF). An MnPF saves the kernel time by reusing a page that is already in memory instead of reading it from disk again.

# /usr/bin/time -v evolution

As an alternative, a more elegant solution for a specific pid is:

# ps -o min_flt,maj_flt ${pid}
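
A process can also inspect its own fault counters. The sketch below assumes a Linux system and uses Python's resource module; the 16 MiB buffer size is an arbitrary choice for illustration. Writing to freshly allocated memory typically shows up as minor faults, since no disk access is needed.

```python
import resource

def fault_counts():
    """Return (minor, major) page-fault counts for the current process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_minflt, usage.ru_majflt

PAGE = resource.getpagesize()
before_minor, before_major = fault_counts()

# Allocate 16 MiB (arbitrary size) and write one byte per page,
# so that the kernel has to map every page of the buffer.
buf = bytearray(16 * 1024 * 1024)
for offset in range(0, len(buf), PAGE):
    buf[offset] = 1

after_minor, after_major = fault_counts()
print("minor faults added:", after_minor - before_minor)
print("major faults added:", after_major - before_major)
```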

03. The File Buffer Cache

The file buffer cache is used by the kernel to minimise MPFs and maximise MnPFs. As a system generates I/O over time, this buffer cache will continue to grow as the system will leave these pages in memory until memory gets low and the kernel needs to “free” some of these pages for other uses. The result is that many system administrators see low amounts of free memory and become concerned when in reality, the system is just making good use of its caches.
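
To see how much memory is really free versus held by the caches, one option is to read /proc/meminfo. The sketch below parses text in that format; the SAMPLE values are made up for illustration and the helper names are hypothetical. On a real system, pass the contents of /proc/meminfo instead.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (kB)."""
    fields = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields

def cache_summary(fields):
    """Return (free_kb, cache_kb): unused memory vs. buffers plus page cache."""
    cache_kb = fields.get("Buffers", 0) + fields.get("Cached", 0)
    return fields.get("MemFree", 0), cache_kb

# Made-up sample in the /proc/meminfo format, not real measurements.
SAMPLE = """\
MemTotal:        8000000 kB
MemFree:          500000 kB
Buffers:          200000 kB
Cached:          3000000 kB
"""

free_kb, cache_kb = cache_summary(parse_meminfo(SAMPLE))
print(f"free: {free_kb} kB, buffers + cached: {cache_kb} kB")
```

In this sample, free memory looks low, yet more than six times as much is held in caches that the kernel can reclaim when needed.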

04. Types of Memory Pages

There are 3 types of memory pages in the Linux kernel:

- Read Pages – Pages of data read in via disk (MPF) that are read only and backed on disk. These pages exist in the Buffer Cache and include static files, binaries, and libraries that do not change. The Kernel will continue to page these into memory as it needs them. If the system becomes short on memory, the kernel will “steal” these pages and place them back on the free list causing an application to have to MPF to bring them back in.

- Dirty Pages – Pages of data that have been modified by the kernel while in memory. These pages need to be synced back to disk at some point by the pdflush daemon (replaced by per-device flusher threads since kernel 2.6.32). In the event of a memory shortage, kswapd (along with pdflush) will write these pages to disk in order to make room in memory.

- Anonymous Pages – Pages of data that do belong to a process, but do not have any file or backing store associated with them. They can't be synchronised back to disk. In the event of a memory shortage, kswapd writes these to the swap device as temporary storage until more RAM is free (“swapping” pages).

05. Writing Data Pages Back to Disk

Applications themselves may choose to write dirty pages back to disk immediately using the fsync() or sync() system calls. These system calls issue a direct request to the I/O scheduler. If an application does not invoke these system calls, the pdflush kernel daemon runs at periodic intervals and writes pages back to disk.
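
As an illustration of an application forcing its dirty pages to disk, here is a minimal Python sketch. The durable_write helper and the scratch file name are hypothetical; os.fsync is the standard-library wrapper around the fsync() system call.

```python
import os
import tempfile

def durable_write(path, data):
    """Write data to path and ask the kernel to flush it to the device."""
    with open(path, "wb") as f:
        f.write(data)          # data sits in Python's buffer at this point
        f.flush()              # hand it to the kernel (page cache, dirty pages)
        os.fsync(f.fileno())   # block until the kernel reports it as written

# Hypothetical scratch file in a fresh temporary directory.
path = os.path.join(tempfile.mkdtemp(), "fsync_demo.bin")
durable_write(path, b"x" * 4096)
print(os.path.getsize(path), "bytes written and synced")
```

Without the os.fsync call, the write would still reach the disk eventually, but only when the kernel's periodic writeback gets to it.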

Monitoring I/O

Calculating IOs Per Second

Every I/O request to a disk takes a certain amount of time. This is due primarily to the fact that a disk must spin and a head must seek. The spinning of a disk is often referred to as “rotational delay” (RD) and the moving of the head as a “disk seek” (DS). The time it takes for each I/O request is calculated by adding DS and RD. A disk's RD is fixed based on the RPM of the drive. An RD is considered half a revolution around a disk.

Each time an application issues an I/O, it takes an average of 8 ms to service that I/O on a 10K RPM disk. Since this is a fixed time, it is imperative that the disk be as efficient as possible with the time it spends reading and writing. The number of I/O requests is often measured in I/Os Per Second (IOPS). The 10K RPM disk has the ability to push 120 to 150 (burst) IOPS. To measure the effectiveness of IOPS, divide the amount of data read or written per second by the number of IOPS; the result is the amount of data moved by each I/O.

Random vs Sequential I/O

The relevance of KB per I/O depends on the workload of the system. There are two different types of workload categories on a system: sequential and random.

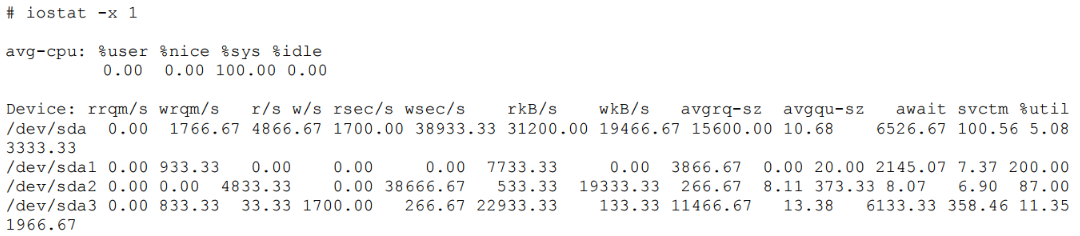

Sequential I/O - Sequential workloads require large amounts of data to be read sequentially and at once. These include applications such as enterprise databases executing large queries and streaming media services capturing data. With sequential workloads, the KB per I/O ratio should be high. Sequential workload performance relies on the ability to move large amounts of data as fast as possible. If each I/O costs time, it is imperative to get as much data out of that I/O as possible. The iostat command provides information on IOPS and the amount of data processed during each I/O; use it with the -x switch (iostat -x 1).

Random I/O - Random access workloads do not depend as much on size of data. They depend primarily on the amount of IOPS a disk can push. Web and mail servers are examples of random access workloads. The I/O requests are rather small. Random access workload relies on how many requests can be processed at once. Therefore, the amount of IOPS the disk can push becomes crucial.

When Virtual Memory Kills I/O

If the system does not have enough RAM to accommodate all requests, it must start to use the swap device. Like file system I/Os, writes to the swap device are costly. If the system is extremely deprived of RAM, it is possible that it will create a paging storm to the swap disk. If the swap device is on the same file system as the data being accessed, the system will enter into contention for the I/O paths, which causes a severe performance breakdown. If pages can't be read or written to disk, they will stay in RAM longer, and the kernel will then need to free that RAM. The problem is that the I/O channels are so clogged that nothing can be done. In the worst case, this leads to a kernel panic and a crash of the system.

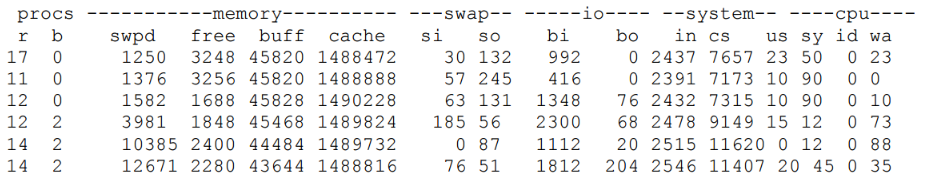

The following vmstat output demonstrates a system under memory distress. It is writing data out to the swap device:

To see the effect the swapping to disk is having on the system, check the swap partition on the drive using iostat.
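
Swap activity can also be read from the pswpin and pswpout counters in /proc/vmstat, which count pages swapped in and out since boot. The sketch below compares two snapshots; the snapshot values are made up for illustration and the helper names are hypothetical.

```python
def parse_vmstat(text):
    """Parse /proc/vmstat-style 'name value' lines into a dict of integers."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters

def swap_activity(before, after):
    """Return (pages swapped in, pages swapped out) between two snapshots."""
    swapped_in = after.get("pswpin", 0) - before.get("pswpin", 0)
    swapped_out = after.get("pswpout", 0) - before.get("pswpout", 0)
    return swapped_in, swapped_out

# Made-up snapshots in the /proc/vmstat format, not real measurements.
FIRST = "pgfault 1000\npswpin 100\npswpout 250\n"
SECOND = "pgfault 4000\npswpin 130\npswpout 900\n"

pages_in, pages_out = swap_activity(parse_vmstat(FIRST), parse_vmstat(SECOND))
print(f"swapped in: {pages_in} pages, swapped out: {pages_out} pages")
```

On a live system, the two snapshots would come from reading /proc/vmstat twice, a few seconds apart. A steadily growing pswpout is a sign of memory pressure.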

Takeaways

- Sustained CPU time spent waiting on I/O suggests that the disks are overloaded.

- Calculate the amount of IOPS your disks can sustain.

- Determine whether your applications require random or sequential disk access.

- Monitor slow disks by comparing wait times and service times.

- Monitor the swap and file system partitions to make sure that virtual memory is not contending for filesystem I/O.

Tasks

01. [10p] Rotational delay - IOPS calculations

- Rotational speed. Measured in RPM, mostly 7,200, 10,000 or 15,000 RPM. A higher rotational speed is associated with a higher-performing disk.

- Average latency. The time it takes for the sector of the disk being accessed to rotate into position under a read/write head.

- Average seek time. The time (in ms) it takes for the hard drive’s read/write head to position itself over the track being read or written.

- Average IOPS. Divide 1000 ms (one second) by the sum of the average latency in ms and the average seek time in ms:

average IOPS = 1000 / (average latency in ms + average seek time in ms)

Let's calculate the Rotational Delay - RD for a 10K RPM drive:

- Divide 10000 RPM by 60 seconds:

10000/60 = 166 RPS

- Convert 1 of 166 to decimal:

1/166 = 0.006 seconds per rotation

- Multiply the seconds per rotation by 1000 milliseconds (6 ms per rotation).

- Divide the total in half (RD is considered half a revolution around a disk):

6/2 = 3 ms

- Add an average of 3 ms for seek time:

3 ms + 3 ms = 6 ms

- Add 2 ms for latency (internal transfer):

6 ms + 2 ms = 8 ms

- Divide 1000 ms by 8 ms per I/O:

1000/8 = 125 IOPS
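
The steps above can be sketched as a small Python function. The function names are hypothetical, and the 3 ms seek time and 2 ms internal transfer latency are the averages assumed in the worked example, not properties of any particular drive. The code keeps full precision instead of rounding to 166 RPS, which gives the same result for a 10K RPM drive.

```python
def rotational_delay_ms(rpm):
    """Average rotational delay: the time for half a revolution, in ms."""
    ms_per_rotation = 60_000 / rpm   # 60,000 ms in a minute
    return ms_per_rotation / 2

def estimated_iops(rpm, seek_ms=3.0, transfer_ms=2.0):
    """Estimate IOPS from rotational delay, seek time and transfer latency."""
    service_ms = rotational_delay_ms(rpm) + seek_ms + transfer_ms
    return 1000 / service_ms         # I/Os that fit in one second

print(rotational_delay_ms(10_000))   # 3.0
print(estimated_iops(10_000))        # 125.0
```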

[10p] Task A - Calculate rotational delay

Add in your archive the operations and the result you obtained. (Screenshot, picture of calculations made by hand on paper)

Calculate the Rotational Delay, and then the IOPS for a 5400 RPM drive.

02. [30p] iostat & iotop

[15p] Task A - Monitoring the behaviour with Iostat

- -x for extended statistics

- -d to display device statistics only

- -m for displaying r/w in MB/s

$ iostat -xdm

Use iostat with -p for specific device statistics:

$ iostat -xdm -p sda

- Run iostat -x 1 5.

- Considering the last two outputs provided by the previous command, calculate the efficiency of IOPS for each of them. Does the amount of data written per I/O increase or decrease?

Add in your archive screenshot or pictures of the operations and the result you obtained, also showing the output of iostat from which you took the values.

- Divide the kilobytes read (rkB/s) and written (wkB/s) per second by the reads per second (r/s) and the writes per second (w/s).

- If you happen to have quite a few loop devices in your iostat output, find out what they are exactly:

$ df -kh /dev/loop*
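
The KB-per-I/O division described in this task can be sketched in Python as follows. The sample values are hypothetical and do not come from a real iostat run; the dictionary keys mirror the iostat -x column names.

```python
def kb_per_io(kb_per_s, ios_per_s):
    """Average KB moved by each I/O; 0.0 when the device was idle."""
    if ios_per_s == 0:
        return 0.0
    return kb_per_s / ios_per_s

# Hypothetical values for two consecutive iostat reports.
samples = [
    {"r/s": 10.0, "rkB/s": 160.0, "w/s": 50.0, "wkB/s": 400.0},
    {"r/s": 0.0, "rkB/s": 0.0, "w/s": 20.0, "wkB/s": 640.0},
]

results = []
for s in samples:
    read_kb = kb_per_io(s["rkB/s"], s["r/s"])
    write_kb = kb_per_io(s["wkB/s"], s["w/s"])
    results.append((read_kb, write_kb))
    print(f"read: {read_kb} KB per I/O, write: {write_kb} KB per I/O")
```

In this made-up example, the data written per I/O grows from 8 KB to 32 KB between the two reports, even though the number of writes per second drops.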

[15p] Task B - Monitoring the behaviour with Iotop

Install iotop on Debian/Ubuntu Linux:

$ sudo apt-get install iotop

How to use the iotop command:

$ sudo iotop

OR

$ iotop

Supported options by iotop command:

| Options | Description |
|---|---|
| --version | show program's version number and exit |
| -h, --help | show this help message and exit |
| -o, --only | only show processes or threads actually doing I/O |
| -b, --batch | non-interactive mode |
| -n NUM, --iter=NUM | number of iterations before ending [infinite] |
| -d SEC, --delay=SEC | delay between iterations [1 second] |
| -p PID, --pid=PID | processes/threads to monitor [all] |
| -u USER, --user=USER | users to monitor [all] |
| -P, --processes | only show processes, not all threads |
| -a, --accumulated | show accumulated I/O instead of bandwidth |
| -k, --kilobytes | use kilobytes instead of a human friendly unit |
| -t, --time | add a timestamp on each line (implies --batch) |
| -q, --quiet | suppress some lines of header (implies --batch) |

- Run iotop (install it if you do not already have it) in a separate shell showing only processes or threads actually doing I/O.

- Inspect the script code (dummy.sh) to see what it does.

- Monitor the behaviour of the system with iotop while running the script.

- Identify the PID and PPID of the process running the dummy script and kill the process using command line from another shell (sending SIGINT signal to both parent & child processes).

Provide a screenshot that shows iotop with only the active processes, one of them being the running script. Then provide another screenshot taken after you have managed to kill it.

03. [30p] RAM disk

Linux allows you to use part of your RAM as a block device, viewing it as a hard disk partition. The advantage of using a RAM disk is the extremely low latency (even when compared to SSDs). The disadvantage is that all contents will be lost after a reboot.

- ramfs - cannot be limited in size and will continue to grow until you run out of RAM. Its size can not be determined precisely with tools like df. Instead, you have to estimate it by looking at the “cached” entry from free's output.

- tmpfs - newer than ramfs. Can set a size limit. Behaves exactly like a hard disk partition but can't be monitored through conventional means (i.e. iostat). Size can be precisely estimated using df.

[15p] Task A - Create RAM Disk

Before getting started, let's find out the file system that our root partition uses. Run the following command (T - print file system type, h - human readable):

$ df -Th

The result should look like this:

Filesystem     Type      Size  Used Avail Use% Mounted on
udev           devtmpfs  1.1G     0  1.1G   0% /dev
tmpfs          tmpfs     214M  3.8M  210M   2% /run
/dev/sda1      ext4      218G  4.1G  202G   2% /          <- root partition
tmpfs          tmpfs     1.1G  252K  1.1G   1% /dev/shm
tmpfs          tmpfs     5.0M  4.0K  5.0M   1% /run/lock
tmpfs          tmpfs     1.1G     0  1.1G   0% /sys/fs/cgroup
/dev/sda2      ext4      923M   73M  787M   9% /boot
/dev/sda4      ext4      266G   62M  253G   1% /home

From the results, we will assume in the following commands that the file system is ext4. If it's not your case, just replace with what you have:

$ sudo mkdir /mnt/ramdisk

$ sudo mount -t tmpfs -o size=1G ext4 /mnt/ramdisk

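To have the RAM disk mounted automatically at boot, add the following line to /etc/fstab (its contents will still be lost on every reboot):

tmpfs /mnt/ramdisk tmpfs rw,nodev,nosuid,size=1G 0 0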

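That's it. We just created a 1 GB tmpfs RAM disk and mounted it at /mnt/ramdisk. Note that tmpfs is itself the file system type; the ext4 argument in the mount command only names the mount source, which is what df lists in its Filesystem column. Use df again to check this yourself.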
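
The size of a mounted file system can also be checked from a program. This is a minimal sketch using shutil.disk_usage from the Python standard library; the describe_mount helper is hypothetical, and the code falls back to / when /mnt/ramdisk is not mounted.

```python
import os
import shutil

def describe_mount(path):
    """Return (total, used, free) in MiB for the file system holding path."""
    usage = shutil.disk_usage(path)
    mib = 1024 * 1024
    return usage.total // mib, usage.used // mib, usage.free // mib

# /mnt/ramdisk is the mount point from this task; use / if it is absent.
target = "/mnt/ramdisk" if os.path.ismount("/mnt/ramdisk") else "/"
total, used, free = describe_mount(target)
print(f"{target}: total {total} MiB, used {used} MiB, free {free} MiB")
```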

[15p] Task B - Pipe View & RAM Disk

As we mentioned before, you can't get I/O statistics regarding tmpfs since it is not a real partition. One solution to this problem is using pv to monitor the progress of data transfer through a pipe. This is a valid approach only if we consider the disk I/O being the bottleneck.

Next, we will generate 512 MB of random data and place it in /mnt/ramdisk/rand first and then in /home/student/rand. The transfer is done using dd with 2048-byte blocks.

$ pv /dev/urandom | dd of=/mnt/ramdisk/rand bs=2048 count=$((512 * 1024 * 1024 / 2048))

$ pv /dev/urandom | dd of=/home/student/rand bs=2048 count=$((512 * 1024 * 1024 / 2048))

Look at the elapsed time and average transfer speed. What conclusion can you draw?

Put one screenshot with the tmpfs partition in df output and one screenshot of both pv commands and write your conclusion.
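
For comparison, a similar measurement can be sketched in Python. This is not a replacement for the pv and dd pipeline: it writes the same random 2048-byte block repeatedly, so random data generation stays out of the timing, and it calls fsync before stopping the clock. The write_throughput helper and the 8 MiB demo size are assumptions made for illustration.

```python
import os
import tempfile
import time

def write_throughput(path, total_bytes, block_size=2048):
    """Write total_bytes to path in block_size chunks; return MiB/s."""
    block = os.urandom(block_size)   # generated once, outside the timed part
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(total_bytes // block_size):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())         # include the flush to the device
    elapsed = time.perf_counter() - start
    return total_bytes / (1024 * 1024) / elapsed

# Small demo run in a temporary directory (8 MiB instead of 512 MB).
demo_path = os.path.join(tempfile.mkdtemp(), "rand")
rate = write_throughput(demo_path, 8 * 1024 * 1024)
print(f"{rate:.1f} MiB/s")
```

Calling the function once with a path under /mnt/ramdisk and once with a path under /home/student would give two figures to compare, in the same spirit as the two pv runs.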

04. [30p] GPU Monitoring

a. [0p] Clone Repository and Build Project

Clone the repository containing the tasks and change to this lab's task 04. Follow the instructions to install the dependencies and build the project from the README.md.

$ git clone https://github.com/cs-pub-ro/EP-labs.git

$ cd EP-labs/lab_05/task_04

b. [10p] Run Project and Collect Measurements

To run the project, simply run the binary generated by the build step. This will render a scene with a sphere. Follow the instructions in the terminal and progressively increase the number of vertices. Upon exiting the simulation with Esc, two .csv files will be created. You will use these measurements to generate plots.

Also pay close attention to your RAM!

Increase vertices until you have less than 10 FPS for good results.

c. [10p] Generate Plot

We want to interpret the results recorded. In order to do this, we need to visually see them in a suggestive way. Plot the results in such a way that they are suggestive and easy to understand.

- One single plot for all results

- Left OY axis shows FPS as a continuous variable

- Right OY axis shows time spent per event in ms

- OX axis follows the time of the simulation without any time ticks

- OX axis has ticks showing the number of vertices for each event that happens

- Every event marked with ticks on the OX axis has one stacked bar chart made of two components:

- a. a bottom component showing time spent copying buffers

- b. a top component showing the rest without the time spent on copying buffers

d. [10p] Interpret Results

Explain the results you have plotted. Answer the following questions:

- Why does the FPS plot look like downwards stairs upon increasing the number of vertices?

- Why does the FPS decrease more initially and then stabilize at a higher value?

- What takes more to compute: generating the vertices, or copying them in the VRAM?

- What is the correlation between the number of vertices and the time to copy the Vertex Buffer?

- Why is the program less responsive on a lower number of frames?

e. [10p] Bonus Dedicated GPU

Go back to step b. and rerun the binary and make it run on your dedicated GPU. Redo the plot with the new measurements. You do not need to answer the questions again.

https://gist.github.com/abenson/a5264836c4e6bf22c8c8415bb616204a

If you use AMD, you can use the DRI_PRIME=1 environment variable.

05. [10p] Feedback

Please take a minute to fill in the feedback form for this lab.

References

- These examples are from Darren Hoch’s Linux System and Performance Monitoring.