Lab 9. Unsupervised Learning. K-Means Clustering

What you will learn:

- How to perform cluster analysis

- How to find the optimal number of clusters

- How to identify appropriate features

- How to interpret results

Unsupervised Learning

What unsupervised machine learning is and when it can be useful:

- Machine learning algorithms learn from observational data and make predictions

- In unsupervised machine learning, the model has to make sense of the data without labeled answers to learn from

- Use case: you have a data set and you don’t know exactly what you are looking for; you are looking for patterns in the data

The most common unsupervised learning method is cluster analysis, which is used for exploratory data analysis to find hidden patterns or groupings in data.

Clustering

Clustering is an important unsupervised learning problem that deals with finding structure in a collection of unlabeled data. Clustering can uncover interesting groupings of people, things or behaviors, for example:

- where millionaires live

- stereotypes from demographic data

- what trends can currently be found in music, movies, etc.

For clustering, we need to define a proximity measure between two data points: either a similarity measure $S(x_a, x_b)$ or a dissimilarity (distance) measure $D(x_a, x_b)$.

Various proximity measures can be used. For points in $d$-dimensional space, the Euclidean distance (a dissimilarity measure) is given by:

$ d(x, y) = \sqrt{\sum_{i=1}^{d} \left ( x_i - y_i \right )^2} $

where:

$ x = [x_1, x_2, ..., x_d] $

$ y = [y_1, y_2, ..., y_d] $
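As a quick check of the formula, here is a minimal NumPy sketch that computes the Euclidean distance directly from the definition and compares it with `np.linalg.norm` of the difference vector. The helper name `euclidean_distance` and the two example points are arbitrary choices for illustration.

```python
import numpy as np

def euclidean_distance(x, y):
    """Euclidean distance d(x, y) = sqrt(sum_i (x_i - y_i)^2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.sqrt(np.sum((x - y) ** 2))

x = [1.0, 2.0, 3.0]
y = [4.0, 6.0, 3.0]
d = euclidean_distance(x, y)
print(d)  # 5.0, since sqrt(3^2 + 4^2 + 0^2) = 5
# the same value via NumPy's vector norm of the difference
print(np.linalg.norm(np.subtract(x, y)))
```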

K-Means Clustering

In general, K-means is a heuristic algorithm that partitions a data set into K clusters by minimizing the within-cluster sum of squared distances between each point and its cluster centroid (WCSS). The standard iterative procedure for K-means is often referred to as Lloyd’s algorithm.

The goal is to group the data into clusters such that:

- intra-class similarity is high

- inter-class similarity is low

The algorithm

K-Means is a simple unsupervised learning algorithm using a fixed number of clusters (k):

- Random initialization of K centroids (plain k-means; the k-means++ variant instead picks initial centroids that are spread apart): for each cluster, a centroid (cluster center) is chosen at random, for example as a randomly selected data point.

- Assignment (loop): in each iteration, every data point is assigned to the cluster whose centroid is closest to it.

- Update: each centroid is recalculated as the mean (average position) of the points assigned to its cluster.

- The loop is repeated until the centroids no longer change (stop condition) or a maximum number of iterations is reached.
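The steps above can be sketched from scratch in NumPy. This is a minimal illustration of Lloyd's algorithm, assuming the initial centroids are k distinct data points picked at random; the function name `lloyd_kmeans` and its parameters are hypothetical, and the lab itself uses scikit-learn's `KMeans` class instead.

```python
import numpy as np

def lloyd_kmeans(X, k, max_iter=100, seed=0):
    """Hypothetical helper: plain K-Means (Lloyd's algorithm) on an (n, d) array X."""
    rng = np.random.default_rng(seed)
    # initialization: pick k distinct data points as the starting centroids
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(max_iter):
        # assignment step: squared Euclidean distance from every point to every centroid
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # update step: each centroid becomes the mean of the points assigned to it
        # (an empty cluster keeps its previous centroid)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # stop condition: the centroids no longer change
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    # final assignment against the centroids that are returned
    labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
    return labels, centroids

# usage: three groups of points in 2D
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(loc=c, scale=0.5, size=(50, 2)) for c in [(0, 0), (5, 5), (0, 5)]])
labels, centroids = lloyd_kmeans(X, k=3)
print(centroids)
```

Since the starting centroids are random, a different `seed` can lead to a different local minimum, which is exactly the issue discussed next.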

There are some things to consider with K-Means clustering:

- Choosing the “right” value of k: try increasing k until the squared error (the sum of squared distances from each point to its centroid) stops decreasing substantially

- Avoiding local minima: the random choice of initial centroids can lead to different results; run the algorithm several times and keep the result with the lowest squared error (scikit-learn's KMeans does this through its n_init parameter)

- Labeling the clusters: K-Means does not assign a meaning to the clusters; it is up to you to examine and interpret them
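One way to see the effect of initialization is to run scikit-learn's `KMeans` with a single random initialization (`n_init=1`) for several `random_state` values and compare the resulting WCSS (`inertia_`). Setting `n_init` higher automates the advice above: scikit-learn runs the algorithm `n_init` times and keeps the run with the lowest inertia. The blob dataset from `make_blobs` is synthetic and chosen only for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# synthetic 2D data with 6 groups (illustrative only)
X, _ = make_blobs(n_samples=300, centers=6, random_state=0)

# single runs with random initial centroids: the result can differ between seeds
single_run_inertias = []
for seed in range(5):
    km = KMeans(n_clusters=6, init="random", n_init=1, random_state=seed).fit(X)
    single_run_inertias.append(km.inertia_)
print("single runs:", np.round(single_run_inertias, 1))

# n_init=10: ten initializations, the result with the lowest inertia is kept
best = KMeans(n_clusters=6, init="random", n_init=10, random_state=0).fit(X)
print("best of 10:", round(best.inertia_, 1))
```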

The following code generates a random array of points and performs K-Means Clustering.

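```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
from sklearn import metrics  # used later for the silhouette score

# 100 random points in 2D (Gaussian noise scaled by 10 and shifted by 6)
X = 10 * np.random.randn(100, 2) + 6

# fit K-Means with k = 3 clusters (n_init runs set explicitly)
kmeans_model = KMeans(n_clusters=3, n_init=10)
kmeans_model.fit(X)

# color each point by its assigned cluster label
plt.scatter(X[:, 0], X[:, 1], c=kmeans_model.labels_, cmap='rainbow', label="points")
plt.show()
```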

Choosing the optimal number of clusters

Typically, we want the clustering to be easy to understand, so we look for a small number of clusters. At the same time, we want enough clusters to capture the most relevant patterns in the data.

We now define the following measures to evaluate the clusters:

- Distortion: the average of the squared Euclidean distances from each point to the centroid of its cluster.

- Inertia: the sum of squared distances of samples to their closest cluster center.

WCSS (Within Cluster Sum of Squares)

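WCSS (inertia) is the sum of the squared (Euclidean) distances from each data point to the centroid of the cluster it was assigned to. A cluster that has a small WCSS is more compact, and therefore “better”, than a cluster that has a large WCSS. The WCSS is used to evaluate the number of clusters for K-Means; however, since the best achievable WCSS never increases as k grows (it reaches 0 when every point is its own cluster), we cannot simply pick the k with the smallest WCSS and instead look for an “elbow” in the WCSS curve.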

$ WCSS(k) = \sum_{j=1}^{k} \sum_{x_i \in C_j} \left \| x_i - \bar{x}_j \right \|^2 $

where:

- $k$ = the number of clusters

- $C_j$ = the set of data points assigned to cluster $j$

- $x_i$ = data point $i$

- $\bar{x}_j$ = the centroid of cluster $j$ (the mean of the points in $C_j$)

scikit-learn already computes the WCSS; it is available as the inertia_ attribute of the fitted model:

```python
inertia = kmeans_model.inertia_
print(inertia)
```
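To connect the formula with `inertia_`, here is a sketch that computes the WCSS cluster by cluster, exactly as in the double sum, and compares it with the value scikit-learn reports; it also computes the distortion (the WCSS divided by the number of points). The synthetic data and the `random_state` values are arbitrary choices.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=200, centers=4, random_state=1)
k = 4
model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)

# WCSS(k): for each cluster C_j, sum the squared distances of its points to centroid j
wcss = 0.0
for j in range(k):
    members = X[model.labels_ == j]
    wcss += np.sum((members - model.cluster_centers_[j]) ** 2)

# distortion: average squared distance of a point to its cluster centroid
distortion = wcss / len(X)

print(wcss, model.inertia_)  # the two values should agree up to floating-point error
print(distortion)
```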

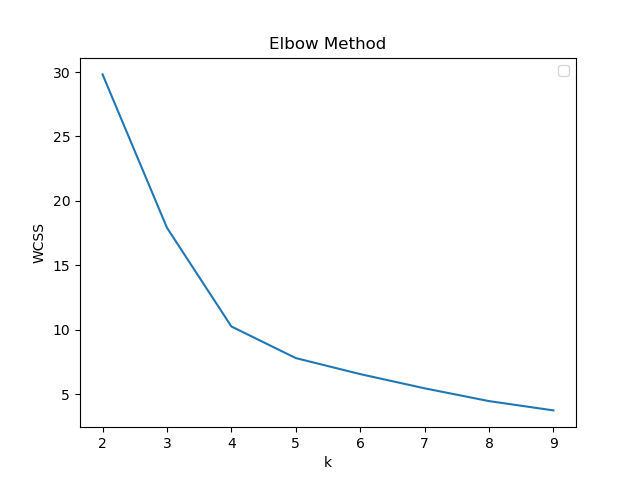

The Elbow Method

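The figure shows the sum of squared distances (WCSS) for each number of clusters k. The curve typically drops steeply at first and then flattens. If the plot looks like an arm, the elbow of the arm (the point after which adding clusters reduces the WCSS only slightly) indicates the optimal k. In this example, the optimal number of clusters is 4.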
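A minimal sketch of the Elbow Method with scikit-learn: fit `KMeans` for a range of k, record `inertia_`, and plot the WCSS against k. The synthetic dataset is generated with 4 centers purely for illustration, so the elbow is expected around k = 4; on real data the elbow is often less sharp and reading it remains a judgment call.

```python
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# synthetic data with 4 groups (an assumption made for the illustration)
X, _ = make_blobs(n_samples=300, centers=4, cluster_std=1.0, random_state=3)

ks = list(range(1, 11))
wcss = []
for k in ks:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss.append(model.inertia_)

# look for the "elbow": the k after which the curve stops dropping steeply
plt.plot(ks, wcss, marker="o")
plt.xlabel("number of clusters k")
plt.ylabel("WCSS (inertia)")
plt.title("Elbow Method")
plt.show()
```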

The Silhouette Coefficient

The Silhouette Coefficient measures how similar a point is to the points of its own cluster compared with the points of other clusters. Silhouette analysis can be used to select the number of clusters for K-Means clustering.

This measure has a range of [-1, 1]:

- close to +1 – the sample is far away from the neighboring clusters

- close to 0 – the sample is very close to the boundary between two clusters

- negative (toward -1) – the sample has probably been assigned to the wrong cluster

The Silhouette Score, i.e. the mean Silhouette Coefficient over all samples, is calculated with scikit-learn's silhouette_score function:

```python
s = metrics.silhouette_score(X, kmeans_model.labels_, metric='euclidean')
print(s)
```
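The score above is the mean of the per-sample coefficients s(i) = (b(i) - a(i)) / max(a(i), b(i)), where a(i) is the mean distance from point i to the other points of its own cluster and b(i) is the smallest mean distance from i to the points of any other cluster. A direct NumPy sketch of that definition, checked against scikit-learn's `silhouette_samples` and `silhouette_score`, might look like this. The data is synthetic, the helper name `silhouette_per_sample` is hypothetical, and singleton clusters get s(i) = 0, following scikit-learn's convention.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_samples, silhouette_score

X, _ = make_blobs(n_samples=120, centers=3, random_state=7)
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

def silhouette_per_sample(X, labels):
    # pairwise Euclidean distances between all points
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    s = np.zeros(len(X))
    for i in range(len(X)):
        same = labels == labels[i]
        same[i] = False  # exclude the point itself
        if not same.any():
            continue  # singleton cluster: s(i) stays 0
        a = D[i, same].mean()
        # mean distance to every other cluster; b is the nearest of those
        b = min(D[i, labels == c].mean() for c in np.unique(labels) if c != labels[i])
        s[i] = (b - a) / max(a, b)
    return s

s_manual = silhouette_per_sample(X, labels)
print(s_manual.mean(), silhouette_score(X, labels, metric="euclidean"))
```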

The clustering evaluation using both the Elbow Method and the Silhouette Coefficient is shown below. In this example, both methods indicate that the optimal number of clusters is 4: the WCSS curve looks like an arm with its elbow at k = 4, and the silhouette coefficient is highest at k = 4.
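A sketch of such a combined evaluation, similar in spirit to the task0_test.py script described in the exercises but not taken from it: loop over k in range(2, 20), store the WCSS and the Silhouette Score for each k, and pick the k with the highest silhouette. The data is the same kind of random point cloud as in the first example, with a fixed seed added for reproducibility; because such data has no real group structure, the selected k should not be read as meaningful.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
from sklearn import metrics

rng = np.random.default_rng(0)
X = 10 * rng.standard_normal((100, 2)) + 6  # random 2D points, as in the first example

ks = list(range(2, 20))
wcss, silhouettes = [], []
for k in ks:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss.append(model.inertia_)
    silhouettes.append(metrics.silhouette_score(X, model.labels_, metric="euclidean"))

# choose the k with the highest mean silhouette coefficient
best_k = ks[int(np.argmax(silhouettes))]
print("optimal number of clusters (silhouette):", best_k)

# WCSS (look for the elbow) and Silhouette Score (look for the maximum) side by side
fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))
ax1.plot(ks, wcss, marker="o")
ax1.set_xlabel("k")
ax1.set_ylabel("WCSS")
ax2.plot(ks, silhouettes, marker="o")
ax2.set_xlabel("k")
ax2.set_ylabel("Silhouette Score")
plt.show()
```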

Case Study

The following case studies represent possible applications for K-Means clustering. The exercises in this lab are based on these examples.

Countries and continents

In this case study, we have a dataset with all the countries in the world, their location (latitude, longitude) and the continent they belong to. This looks like a clustering problem: let's say we don't know the continents and we want to find them using clustering. The algorithm has to discover the continents from the countries' locations alone.

We may also want to divide the world differently than by continents, for example into 3 clusters. Clustering handles this as well: we simply set k = 3. The results are shown in the figure below:

Here is the relevant code for clustering in this scenario:

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans

# read the csv dataset
data = pd.read_csv('countries_continents.csv', encoding='latin-1')
X, head = data.values, data.columns.values

# map the continent names (column 3) to integer codes
print("continents: ")
continents = {e: i for i, e in enumerate(np.unique(X[:, 3]))}
print(continents)
X[:, 3] = np.vectorize(continents.__getitem__)(X[:, 3])

# keep only the location columns: drop the first column (country) and the last one (continent)
X = X[:, 1: np.size(X, 1) - 1].astype(float)

# run K-Means clustering algorithm
kmeans = KMeans(n_clusters=3, init="k-means++", n_init=10)
kmeans.fit(X)
clusters = kmeans.predict(X)
print("cluster labels: ")
print(clusters)

# show the assigned cluster centers (centroids)
print("cluster centers")
centroids = kmeans.cluster_centers_
print(centroids)

# show the labels assigned to each data point
print("cluster labels")
print(kmeans.labels_)
```

Market segmentation

In this case study, we have a dataset with information about customers: age, amount spent, satisfaction, brand loyalty. We are interested in revealing patterns in customer behavior so that we can define a data-aware business strategy. Let's assume that we have customer satisfaction (CSAT) scores from 1 to 10 (self-reported discrete data, where 1 = very dissatisfied and 10 = very satisfied). We also have a score for each customer's level of brand loyalty; this is a trickier measure, based on churn rate, retention rate and customer lifetime value (CLV), in the range [-2.5, 2.5].

In this case, we have two measures with different ranges ([1, 10] and [-2.5, 2.5]). Because K-Means relies on Euclidean distances, the measure with the larger range would dominate the clustering. To obtain good results, we standardize the data before running the clustering algorithm:

```python
import pandas as pd
from sklearn import preprocessing

# read the csv dataset
data = pd.read_csv('market_segmentation_data.csv', encoding='latin-1')
X, head = data.values, data.columns.values

# standardize each column to zero mean and unit variance
X = preprocessing.scale(X)
```
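To see what the scaling does, here is a small sketch with made-up values in the two ranges from the text (satisfaction in [1, 10], loyalty in [-2.5, 2.5]); the numbers are synthetic, not the lab dataset. `preprocessing.scale` standardizes each column to zero mean and unit variance, which matches `(X - X.mean(axis=0)) / X.std(axis=0)`.

```python
import numpy as np
from sklearn import preprocessing

rng = np.random.default_rng(0)
# synthetic customers: column 0 = satisfaction (1..10), column 1 = loyalty (-2.5..2.5)
satisfaction = rng.integers(1, 11, size=30).astype(float)
loyalty = rng.uniform(-2.5, 2.5, size=30)
X = np.column_stack([satisfaction, loyalty])

X_scaled = preprocessing.scale(X)
# equivalent manual standardization (population standard deviation, ddof=0)
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)

print(X.std(axis=0))         # raw spreads differ, so the wider column weighs more in distances
print(X_scaled.std(axis=0))  # after scaling, both columns have standard deviation 1
```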

The satisfaction/loyalty chart can be divided into 4 squares (quadrants) based on the measured level of satisfaction and brand loyalty:

- low satisfaction, low loyalty

- high satisfaction, high loyalty

- low satisfaction, high loyalty

- high satisfaction, low loyalty

Let's first use 2 clusters, which should reveal the extremes of customer behavior. K-Means does not name the clusters, so it is our job to interpret the results. In this case, the two clusters correspond to the two extreme behaviors:

- the low satisfaction, low loyalty customers

- the high satisfaction, high loyalty customers

Now, as we've defined the 4 squares, let's use 4 clusters to represent a pattern that is more useful for business purposes. The results are shown in the chart below:

The 4 clusters reveal a clearer representation of customer behaviors, which actually fits the 4 squares defined before:

- low satisfaction, low loyalty group (let's call them “alienated” customers)

- high satisfaction, high loyalty group (let's call them “fans”)

- low satisfaction, high loyalty group (let's call them “supporters”)

- high satisfaction, low loyalty group (let's call them “roamers”)
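Labeling is left to us, but with standardized data one simple approach is to look at the sign of each centroid coordinate: positive means above-average satisfaction or loyalty, negative means below average. The sketch below assumes column 0 is satisfaction and column 1 is loyalty; the helper `name_cluster`, the `NAMES` table and the synthetic customers are hypothetical, and "high" and "low" are relative to the mean.

```python
import numpy as np
from sklearn import preprocessing
from sklearn.cluster import KMeans

# names for the four squares, keyed by (high satisfaction?, high loyalty?)
NAMES = {
    (False, False): "alienated",
    (True, True): "fans",
    (False, True): "supporters",
    (True, False): "roamers",
}

def name_cluster(centroid):
    """Hypothetical helper: name a cluster from its standardized (satisfaction, loyalty) centroid."""
    satisfaction, loyalty = centroid
    return NAMES[(bool(satisfaction > 0), bool(loyalty > 0))]

# synthetic customers scattered around the four squares (illustration only)
rng = np.random.default_rng(1)
centers = [(2, -2), (9, 2), (2, 2), (9, -2)]
X = np.vstack([rng.normal(loc=c, scale=[0.8, 0.4], size=(40, 2)) for c in centers])
X = preprocessing.scale(X)

kmeans = KMeans(n_clusters=4, n_init=10, random_state=0).fit(X)
for j, c in enumerate(kmeans.cluster_centers_):
    print(j, np.round(c, 2), name_cluster(c))
```

On real data the centroids may sit close to the axes, so a sign rule like this is only a starting point for interpretation.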

Great! Now we can define business strategies based on the actual customer behavior patterns, and turn those supporters and roamers into fans.

Exercises

Setup

Download the project archive and install the required packages listed in requirements.txt (for example with pip install -r requirements.txt).

Task 0 (2p). Random Dataset

Run task0.py:

- The script generates a random dataset of 100 rows and 2 columns, i.e. 100 points in 2D space (x, y).

- We want to find out how these points can be assigned to clusters using the K-Means algorithm.

- The K-Means algorithm is found in clustering.py via clustering_kmeans(X, k), which uses the KMeans class from scikit-learn.

- Both the WCSS and the Silhouette Score are based on the Euclidean distance; the Silhouette Score is calculated with the silhouette_score function from scikit-learn.

- The data points and cluster centroids are shown on a scatter plot (2D).

Task: Change the number of clusters in task0.py and run the script for each case. Report the results as plots.

Run task0_test.py:

- The script generates a random dataset and runs the clustering with a variable number of clusters: range(2, 20), i.e. k = 2 to 19.

- For each selection, the clustering algorithm is evaluated with the WCSS and Silhouette Score.

- The optimal number of clusters is determined based on the Silhouette Score.

- The results are shown on a plot (WCSS, Silhouette Score) for each k (number of clusters)

Task: What is the optimal number of clusters? Present the results as a plot and number of clusters.

Task 1 (4p). Countries and Continents

Run task1.py:

- The script loads the dataset from a CSV file which contains data about countries and continents.

- We want to find out how these countries can be assigned to clusters using the K-Means algorithm.

- The names of the continents are converted to numbers to run the clustering.

- The script clusters the data using a defined number of clusters.

- The data points and cluster centroids are shown on a scatter plot (2D).

Task: Change the number of clusters in task1.py and report the results as plots.

Run task1_test.py:

- The script loads the dataset about countries and continents and performs clustering as in task1.py, with a variable number of clusters: range(2, 20).

- For each selection, the clustering algorithm is evaluated with the WCSS and Silhouette Score.

- The optimal number of clusters is determined based on the Silhouette Score.

- The results are shown on a plot (WCSS, Silhouette Score) for each k (number of clusters)

Task: What is the optimal number of clusters? Present the results as a plot and number of clusters.

Task 2 (4p). Market Segmentation

Run task2.py:

- The script loads the dataset from a CSV file which contains the data about customer behavior in the Market Segmentation case study.

- We want to find out how these behaviors can be assigned to clusters using the K-Means algorithm.

- The data now contains features with different ranges, which have to be scaled to obtain good results with the clustering algorithm.

- The script runs the clustering algorithm and plots the data points and cluster centroids on a scatter plot (2D).

Task: Change the number of clusters in task2.py and run the script for each case. Report the results as plots.

Write task2_test.py:

- The script has to load the customer behavior dataset.

- The approach is similar to task1_test.py.

- The range of clusters is defined as range(2, 10). For each number of clusters, the clustering algorithm is run and the WCSS and Silhouette Scores are saved into a list.

- The optimal number of clusters is evaluated using the Silhouette Score

- The results are shown on a plot (WCSS, Silhouette Score) for each k (number of clusters)

Task: What is the optimal number of clusters? Present the results as a plot and number of clusters.