Lab 03 - CPU Monitoring

Objectives

- Offer an introduction to Performance Monitoring

- Present the main CPU metrics and how to interpret them

- Get you to use various tools for monitoring the performance of the CPU

- Familiarize you with the x86 Hardware Performance Counters

Proof of Work

Before you start, create a Google Doc. Here, you will add screenshots / code snippets / comments for each exercise. Whatever you decide to include, it must prove that you managed to solve the given task (so don't show just the output, but how you obtained it and what conclusion can be drawn from it). If you decide to complete the feedback for bonus points, include a screenshot with the form submission confirmation, but not with its contents.

When done, export the document as a PDF and upload it to the appropriate assignment on Moodle. The deadline is 23:55 on Friday.

Introduction

Tasks

The skeleton for this lab can be found in this repository. Clone it locally before you start.

01. [30p] Vmstat

The vmstat utility provides a good low-overhead view of system performance. Since vmstat is such a low-overhead tool, it is practical to have it running even on heavily loaded servers when it is needed to monitor the system’s health.

[10p] Task A - Monitoring stress

Run vmstat on your machine with a 1 second delay between updates. Notice the CPU utilisation (info about the output columns here).

In another terminal, use the stress command to start N CPU workers, where N is the number of cores on your system. Do not pass the number directly. Instead, use command substitution.

Note: if you are trying to solve the lab on fep and you don't have stress installed, try cloning and compiling stress-ng.

[10p] Task B - How does it work?

Let us look at how vmstat works under the hood. We can assume that all these statistics (memory, swap, etc.) cannot normally be gathered in userspace. So how does vmstat get these values from the kernel? Or rather, how does any process interact with the kernel? Most obvious answer: system calls.

$ strace vmstat

“All well and good. But what am I looking at?”

What you should be looking at are the system calls after the two writes that display the output header (hint: it has to do with /proc/ file system). So, what are these files that vmstat opens?

$ file /proc/meminfo
$ cat /proc/meminfo
$ man 5 proc

The manual should contain enough information about what these kernel interfaces can provide. However, if you are interested in how the kernel generates the statistics in /proc/meminfo (for example), a good place to start would be meminfo.c (but first, SO2 wiki).
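To illustrate that these /proc files are plain text that any userspace process can read and parse, here is a minimal Python sketch. It is not how vmstat itself is implemented; the `parse_meminfo` helper and the sample values are hypothetical and only mirror the `Field: value kB` layout of /proc/meminfo.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value} dict.

    Values are returned as integers; most fields are reported in kB.
    """
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


# Hypothetical sample; on Linux you could pass open("/proc/meminfo").read().
SAMPLE = """MemTotal:       16303428 kB
MemFree:         8123456 kB
HugePages_Total:       0
"""

meminfo = parse_meminfo(SAMPLE)
print(meminfo["MemTotal"], meminfo["MemFree"])
```

Each read of the real file triggers the kernel to regenerate its contents, which is why tools that poll it at an interval see fresh values.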

[10p] Task C - USO flashbacks (1)

Write a one-liner that uses vmstat to report complete disk statistics and sort the output in descending order based on total reads column.

Hint: tail -n +3 prints its input starting from the third line, which is useful for skipping header lines.

02. [30p] Mpstat

[10p] Task A - Python recursion depth

Try to run the script while passing 1000 as a command line argument. Why does it crash?

Luckily, python allows you to both retrieve the current recursion limit and set a new value for it. Increase the recursion limit so that the process will never crash, regardless of input (assume that it still has a reasonable upper bound).
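The two calls in question are `sys.getrecursionlimit()` and `sys.setrecursionlimit()`. The sketch below uses a toy `depth` function (hypothetical, not the lab script) to show the crash and how raising the limit relative to the requested depth avoids it. The headroom of 1000 frames is an arbitrary assumption.

```python
import sys


def depth(n):
    """Recurse n levels deep and return the depth reached."""
    return 0 if n == 0 else 1 + depth(n - 1)


old_limit = sys.getrecursionlimit()   # commonly 1000 by default
wanted = old_limit + 100              # deeper than the interpreter allows

try:
    depth(wanted)
    crashed = False
except RecursionError:
    crashed = True

# Raise the limit relative to the requested depth, leaving headroom
# for the frames that are already on the stack.
sys.setrecursionlimit(wanted + 1000)
reached = depth(wanted)
sys.setrecursionlimit(old_limit)      # restore the previous limit

print(crashed, reached)
```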

[10p] Task B - CPU affinity

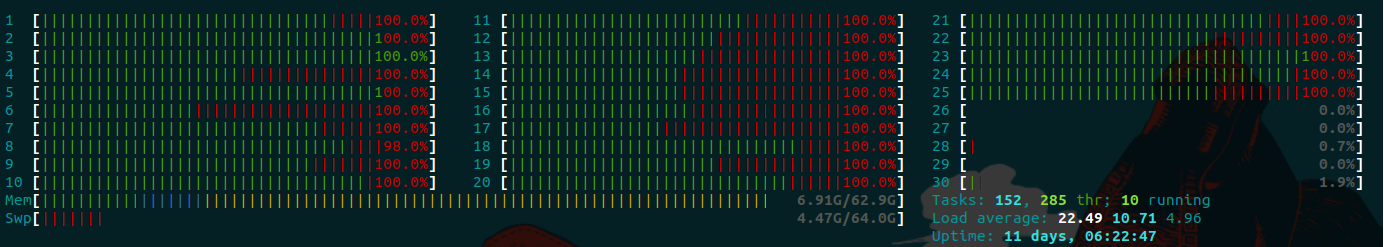

Run the script again, this time passing 10000. Use mpstat to monitor the load on each individual CPU at 1s intervals. The one with close to 100% load will be the one running our script. Note that the process might be passed around from one core to another.

Stop the process. Use stress to create N-1 CPU workers, where N is the number of cores on your system. Use taskset to set the CPU affinity of the N-1 workers to CPUs 1-(N-1) and then run the script again. You should notice that the process is scheduled on cpu0.

Note: to get the best performance when running a process, make sure that it stays on the same core for as long as possible. Don't let the scheduler decide this for you, if you can help it. Allowing it to bounce your process between cores can drastically impact the efficient use of the cache and the TLB. This holds especially true when you are working with servers rather than your personal PCs. While the problem may not manifest on a system with only 4 cores, you can't guarantee that it also won't manifest on one with 40 cores. When running several experiments in parallel, aim for something like this:

[10p] Task C - USO flashbacks (2)

Write a bash command that binds CPU stress workers on your odd-numbered cores (i.e.: 1,3,5,…). The list of cores and the number of stress workers must NOT be hardcoded, but constructed based on nproc (or whatever else you fancy).

In your submission, include both the bash command and an mpstat capture to prove that the command is working.

03. [15p] Zip with compression levels

The zip command is used for compression and file packaging on Linux/Unix operating systems. It provides 10 levels of compression, where:

- level 0 : provides no compression, only packaging

- level 6 : used as default compression level

- level 9 : provides maximum compression

$ zip -5 file.zip file.txt

[10p] Task A - Measurements

Write a script to measure the compression rate and the time required for each level. You have a few large files in the code skeleton but feel free to add more. If you do add new files, make sure that they are not random data!
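As a rough sketch of what such a measurement loop can look like, the Python snippet below uses the standard `zlib` module as a stand-in for the zip command (both are built on DEFLATE and accept levels 0 to 9). The `measure_levels` helper and the synthetic input are assumptions for illustration; an actual solution should invoke zip on the skeleton files.

```python
import time
import zlib


def measure_levels(data, repeats=3):
    """Return {level: (compression_ratio, avg_seconds)} for levels 0..9."""
    results = {}
    for level in range(10):
        elapsed = 0.0
        for _ in range(repeats):
            start = time.perf_counter()
            compressed = zlib.compress(data, level)
            elapsed += time.perf_counter() - start
        # Ratio = compressed size / original size (smaller is better).
        results[level] = (len(compressed) / len(data), elapsed / repeats)
    return results


# Hypothetical, highly repetitive input; real measurements should use the
# large files from the lab skeleton.
data = b"the quick brown fox jumps over the lazy dog\n" * 20000
results = measure_levels(data)
for level, (ratio, seconds) in results.items():
    print(f"level {level}: ratio={ratio:.4f} time={seconds * 1e3:.2f} ms")
```

Averaging over several repetitions, as done here, smooths out scheduling noise in the timing.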

[5p] Task B - Plot

Generate a plot illustrating the compression rate, size decrease, etc. as a function of zip compression level. Make sure that your plot is understandable (i.e., has labels, a legend, etc.). Make sure to average multiple measurements for each compression level.

04. [25p] Microcode analysis

llvm-mca is a machine code analyzer that simulates the execution of a sequence of instructions. By leveraging high-level knowledge of the micro-architectural implementation of the CPU, as well as its execution pipeline, this tool is able to determine the execution speed of said instructions in terms of clock cycles. More importantly though, it can highlight possible contentions of two or more instructions over CPU resources or rather, its ports.

Note that llvm-mca is not the most reliable tool when predicting the precise runtime of an instruction block (see this paper for details). After all, CPUs are not as simple as the good old AVR microcontrollers. While calculating the execution time of an AVR linear program (i.e.: no conditional loops) is as simple as adding up the clock cycles associated with each instruction (from the reference manual), things are never that clear-cut when it comes to CPUs. CPU manufacturers such as Intel oftentimes implement hardware optimizations that are not documented or even publicized. For example, we know that the CPU caches instructions in case a loop is detected. If this is the case, then the instructions are dispatched once again from the buffer, thus avoiding extra instruction fetches. What happens though, if the size of the loop's contents exceeds this buffer size? Obviously, without knowing certain aspects such as this buffer size, not to mention anything about microcode or unknown hardware optimizations, it is impossible to give accurate estimates.

[5p] Task A - Preparing the input

As a simple example we will look at task_04/csum.c. This file contains the `csum_16b1c()` function that computes the 16-bit one's complement checksum used in the IP and TCP headers.

Since llvm-mca requires assembly code as input, we first need to translate the provided C code. Because the assembly parser it utilizes is the same as clang's, use it to compile the C program but stop after the LLVM generation and optimization stages, when the target-specific assembly code is emitted.

$ clang -S -masm=intel csum.c # output = csum.s

LLVM-MCA-BEGIN and LLVM-MCA-END markers can be parsed (as assembly comments) in order to restrict the scope of the analysis.

These markers can also be placed in C code (see gcc extended asm and llvm inline asm expressions):

asm volatile("# LLVM-MCA-BEGIN" ::: "memory");

Remember, however, that this approach is not always desirable, for two reasons:

- Even though this is just a comment, the volatile qualifier can pessimize optimization passes. As a result, the generated code may not correspond to what would normally be emitted.

- Some code structures can not be included in the analysis region. For example, if you want to include the contents of a for loop, doing so by injecting assembly meta comments in C code will exclude the iterator increment and condition check (which are also executed on every iteration).

[10p] Task B - Analyzing the assembly code

Use llvm-mca to inspect its expected throughput and “pressure points” (check out this example).

One important thing to remember is that llvm-mca does not simulate the behavior of each instruction, but only the time required for it to execute. In other words, if you load an immediate value in a register via mov rax, 0x1234, the analyzer will not care what the instruction does (or what the value of rax even is), but how long it takes the CPU to do it. The implication is quite significant: llvm-mca is incapable of analyzing complex sequences of code that contain conditional structures, such as for loops or function calls. Instead, given the sequence of instructions, it will pass through each of them one by one, ignoring their intended effect: conditional jump instructions will fall through, call instructions will be passed over without even considering the cost of the associated ret, etc. The closest we can come to analyzing a loop is by reducing the analysis scope via the aforementioned LLVM-MCA-* markers and controlling the number of simulated iterations from the command line.

To solve this issue, you can set the number of iterations from the command line, so its behavior can resemble an actual loop.

A very short description of each port's main usage:

- Port 0,1: arithmetic instructions

- Port 2,3: load operations, AGU (address generation unit)

- Port 4: store operations, AGU

- Port 5: vector operations

- Port 6: integer and branch operations

- Port 7: AGU

The significance of the SKL ports reported by llvm-mca can be found in the Skylake machine model config. To find out if your CPU belongs to this category, RTFS and run an inxi -Cx.

Notice the number of micro-operations (#uOps) associated with each instruction. These are the number of primitive operations that each instruction (from the x86 ISA) is broken into. Fun and irrelevant fact: the hardware implementation of certain instructions can be modified via microcode upgrades.

Anyway, keeping in mind this #uOps value (for each instruction), we'll notice that the sum of all resource pressures per port will equal that value. In other words, resource pressure means the average number of micro-operations that depend on that resource.

[10p] Task C - In-depth examination

Now that you've got the hang of things, use the -bottleneck-analysis flag to identify contentious instruction sequences.

Explain the reason to the best of your abilities. For example, the following two instructions display a register dependency because the mov instruction needs to wait for the push instruction to update the RSP register.

0. push rbp         ## REGISTER dependency: rsp
1. mov rbp, rsp     ## REGISTER dependency: rsp

How would you go about further optimizing this code?

05. [10p] Bonus - Hardware Counters

A significant portion of the system statistics that can be generated involve hardware counters. As the name implies, these are special registers that count the number of occurrences of specific events in the CPU. These counters are implemented through Model Specific Registers (MSR), control registers used by developers for debugging, tracing, monitoring, etc. Since these registers may be subject to changes from one iteration of a microarchitecture to the next, we will need to consult chapters 18 and 19 from Intel 64 and IA-32 Architectures Developer's Manual: Vol. 3B.

The instructions that are used to interact with these counters are RDMSR, WRMSR and RDPMC. Normally, these are considered privileged instructions (that can be executed only in ring0, aka. kernel space). As a result, acquiring this information from ring3 (user space) requires a context switch into ring0, which we all know to be a costly operation. The objective of this exercise is to prove that this is not necessarily the case and that it is possible to configure and examine these counters from ring3 in as few as a couple of clock cycles.

Before getting started, one thing to note is that there are two types of performance counters:

- Fixed Function Counters

  - each can monitor a single, distinct and predetermined event (burned in hardware)

  - are configured a bit differently than the other type

  - are not of interest to us in this laboratory

- General Purpose Counters

  - can be configured to monitor a specific event from a list of over 200 (see chapters 19.1 and 19.2)

Here is an overview of the following five tasks:

- Task A: check the version ID of your CPU to determine what it's capable of monitoring.

- Task B: set a certain bit in CR4 to enable ring3 usage of the RDPMC instruction.

- Task C: use some ring3 tools to enable the hardware counters.

- Task D: start counting L2 cache misses.

- Task E: use RDPMC to measure the cache misses for a familiar program.

Task A - Hardware info

First of all, we need to know what we are working with. Namely, the microarchitecture version ID and the number of counters per core. To this end, we will use cpuid (basically a wrapper over the CPUID instruction). All the information that we need will be contained in the 0AH leaf (you might want to get the raw output of cpuid):

- CPUID.0AH:EAX[15:8] : number of general purpose counters

- CPUID.0AH:EAX[7:0] : version ID

- CPUID.0AH:EDX[4:0] : number of fixed function counters

Note: the first two columns of the output represent the EAX and ECX registers used when calling CPUID. If the most significant bit in EAX is 1 (i.e.: starts with 0x8) the output is for extended options. ECX is a relatively new addition. So when looking for the 0AH leaf, search for a line starting with 0x0000000a. The register contents following ':' represent the output of the instruction.
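Extracting these fields from the raw register values is a matter of shifting and masking. The Python sketch below shows one way to do it; the `decode_cpuid_0a` helper and the register values are hypothetical and not taken from a specific machine. Note that it masks EDX with 0x1F, since the Intel manual places the fixed function counter count in EDX[4:0].

```python
def decode_cpuid_0a(eax, edx):
    """Extract the performance monitoring fields from CPUID leaf 0AH."""
    return {
        "version_id": eax & 0xFF,            # EAX[7:0]
        "gp_counters": (eax >> 8) & 0xFF,    # EAX[15:8]
        "fixed_counters": edx & 0x1F,        # EDX[4:0]
    }


# Hypothetical register values, used only to exercise the bit extraction.
info = decode_cpuid_0a(eax=0x07300404, edx=0x00000603)
print(info)
```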

Point out to your assistant which is which in the cpuid output.

Task B - Unlock RDPMC in ring3

Due to security considerations, reading the Performance Monitor Counters from userspace is normally not allowed. This is enforced at a hardware level via the Performance-Monitor Counter Enable bit in CR4.

Under normal circumstances, modifying Control Registers from userspace is not possible and you would have to write a kernel module for this. However, the perf_event_open() man page documents a sysfs interface (i.e., /sys/bus/event_source/devices/cpu/rdpmc) that does this for us.

Use the sysfs interface to revert the RDPMC access behavior to the pre-4.0 version.

Task C - Configure IA32_PERF_GLOBAL_CTRL

The IA32_PERF_GLOBAL_CTRL (0x38f) MSR is an addition from version 2 that allows enabling / disabling multiple counters with a single WRMSR instruction. What happens, in layman's terms, is that the CPU performs an AND between each ENABLE bit in this register and its counterpart in the counter's original configuration register from version 1 (which we will deal with in the next task). If the result is 1, the counter begins to register the programmed event every clock cycle. Normally, all these bits should be set by default during the booting process but it never hurts to check. Also, note that this register exists for each logical core.

Whereas for CR4 we relied on a sysfs interface, for MSRs we have user space tools that take care of this for us (rdmsr and wrmsr) by interacting with a driver called msr (install msr-tools if it's missing from your system). But first, we must load this driver.

$ lsmod | grep msr
$ sudo modprobe msr
$ lsmod | grep msr
msr                    16384  0

Next, let us read the value in the IA32_PERF_GLOBAL_CTRL register. If the result differs from what you see in the snippet below, overwrite the value (the -a flag specifies that we want the command to run on each individual logical core).

$ sudo rdmsr -a 0x38f
70000000f
$ sudo wrmsr -a 0x38f 0x70000000f
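The value 0x70000000f is not arbitrary: in IA32_PERF_GLOBAL_CTRL the enable bits of the general purpose counters start at bit 0, while those of the fixed function counters start at bit 32. A short Python sketch of how the value is assembled follows; the `global_ctrl_mask` helper is hypothetical and the counter counts (4 and 3) are assumptions that should be replaced with the ones reported by CPUID on your machine.

```python
def global_ctrl_mask(gp_counters, fixed_counters):
    """Build an IA32_PERF_GLOBAL_CTRL value enabling all counters.

    General purpose counter enables start at bit 0; fixed function
    counter enables start at bit 32.
    """
    gp_bits = (1 << gp_counters) - 1
    fixed_bits = (1 << fixed_counters) - 1
    return (fixed_bits << 32) | gp_bits


# Assumed: 4 general purpose and 3 fixed function counters per logical core.
mask = global_ctrl_mask(gp_counters=4, fixed_counters=3)
print(hex(mask))   # 0x70000000f
```

If your CPU reports a different number of counters, the expected register value changes accordingly.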

Task D - Configure IA32_PERFEVENTSELx

The IA32_PERFEVENTSELx are MSRs from version 1 that are used to configure the monitored event of a certain counter, its enabled state and a few other things. We will not go into detail and instead only mention the fields that interest us right now (you can read about the rest in the Intel manual). Note that the x in the MSR's name stands for the counter number. If we have 4 counters, it takes values in the 0:3 range. The one that we will configure is IA32_PERFEVENTSEL0 (0x186). If you want to configure more than one counter, note that they have consecutive register numbers (i.e. 0x187, 0x188, etc.).

As for the register flags, those that are not mentioned in the following list should be left cleared:

- EN (enable flag) = 1 starts the counter

- USR (user mode flag) = 1 monitors only ring3 events

- UMASK (unit mask) = ?? depends on the monitored event (see chapter 19.2)

- EVSEL (event select) = ?? depends on the monitored event (see chapter 19.2)

Before actually writing in this register, we should verify that no one is currently using it. If this is indeed the case, we might also want to clear IA32_PMC0 (0xc1). PMC0 is the actual counter that is associated to PERFEVENTSEL0.

$ sudo rdmsr -a 0x186
0
$ sudo wrmsr -a 0xc1 0x00
$ sudo wrmsr -a 0x186 0x41????

For the next (and final) task we are going to monitor the number of L2 cache misses. Look for the L2_RQSTS.MISS event in table 19-3 or 19-11 (depending on CPU version ID) in the Intel manual and set the last two bytes (the unit mask and event select) accordingly. If the operation is successful and the counters have started, you should start seeing non-zero values in the PMC0 register, increasing in subsequent reads.
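To see where the 0x41 prefix comes from, recall the layout of the register: event select occupies bits 7:0, the unit mask bits 15:8, USR is bit 16 and EN is bit 22. The Python sketch below encodes a value from these fields. The `perfeventsel` helper is hypothetical, and the event and unit mask codes are deliberately meaningless placeholders, NOT the L2_RQSTS.MISS encoding, which you still need to look up in the manual.

```python
USR_BIT = 16   # count events occurring in ring3
EN_BIT = 22    # enable the counter


def perfeventsel(event_select, unit_mask, usr=True, enable=True):
    """Encode an IA32_PERFEVENTSELx value; unlisted flags stay cleared."""
    if not (0 <= event_select <= 0xFF and 0 <= unit_mask <= 0xFF):
        raise ValueError("event select and unit mask are 8-bit fields")
    value = event_select | (unit_mask << 8)
    value |= int(usr) << USR_BIT
    value |= int(enable) << EN_BIT
    return value


# Placeholder codes for illustration only, not a real event encoding.
value = perfeventsel(event_select=0xAB, unit_mask=0xCD)
print(hex(value))   # 0x41cdab
```

With both flags set and the two low bytes zeroed, the function returns 0x410000, which matches the fixed part of the value written with wrmsr.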

Look up your CPU model in /proc/cpuinfo and identify your microarchitecture based on the table below. Then search for the desired event in the appropriate section of the site.

| Generation | Microarchitecture (Core Codename) | Release Year | Typical CPU Numbers |
|---|---|---|---|
| 1st | Nehalem / Westmere | 2008–2010 | i3,5,7 3xx–9xx |
| 2nd | Sandy Bridge | 2011 | i3,5,7 2xxx |
| 3rd | Ivy Bridge | 2012 | i3,5,7 3xxx |
| 4th | Haswell | 2013 | i3,5,7 4xxx |
| 5th | Broadwell | 2014–2015 | i3,5,7 5xxx |
| 6th | Skylake | 2015 | i3,5,7 6xxx |
| 7th | Kaby Lake | 2016–2017 | i3,5,7 7xxx |
| 8th | Coffee Lake / Amber Lake / Whiskey Lake | 2017–2018 | i3,5,7 8xxx |
| 9th | Coffee Lake Refresh | 2018–2019 | i3,5,7,9 9xxx |
| 10th | Comet Lake / Ice Lake / Tiger Lake | 2019–2020 | i3,5,7,9 10xxx |
| 11th | Rocket Lake / Tiger Lake | 2021 | i3,5,7,9 11xxx |
| 12th | Alder Lake | 2021–2022 | i3,5,7,9 12xxx |
| 13th | Raptor Lake | 2022–2023 | i3,5,7,9 13xxx |
| 14th | Raptor Lake Refresh | 2023–2024 | i3,5,7,9 14xxx |
| — | Meteor Lake | 2023–2024 | Core Ultra 5,7,9 1xx |
| — | Arrow Lake / Lunar Lake | 2024–2025 | Core Ultra 5,7,9 2xx |

Task E - Ring3 cache performance evaluation

As of now, we should be able to enable ring3 use of RDPMC (i.e., set the CR4 bit) via the sysfs interface, enable all counters in IA32_PERF_GLOBAL_CTRL across all cores and start an L2 cache miss counter, again across all cores. What remains is putting everything into practice.

Take mat_mul.c. This program may be familiar from an ASC laboratory but, in case it isn't, the gist of it is that when using the naive matrix multiplication algorithm (O(n^3)), the frequency with which each iterator varies can wildly affect the performance of the program. The reason behind this is (in)efficient use of the CPU cache. Take a look at the following snippet from the source and keep in mind that each matrix buffer is a contiguous area in memory.

for (uint32_t i=0; i<N; ++i)             /* line   */
    for (uint32_t j=0; j<N; ++j)         /* column */
        for (uint32_t k=0; k<N; ++k)
            r[i*N + j] += m1[i*N + k] * m2[k*N + j];

What is the problem here? The problem is that i and k are multiplied with a large number N when updating a certain element. Thus, fast variations in these two indices will cause huge strides in accessed memory areas (larger than a cache line) and will cause unnecessary cache misses. So what are the best and worst configurations for the three for loops? The best: i, k, j. The worst: j, k, i. As we can see, the configurations that we will monitor in mat_mul.c do not coincide with the aforementioned two (so… not great, not terrible). Even so, the difference in execution time and number of cache misses will still be significant.
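The stride argument can be made concrete with a little arithmetic. The Python sketch below computes, for a given innermost loop variable, how many bytes each array access moves between consecutive iterations, based on the index expressions r[i*N + j], m1[i*N + k] and m2[k*N + j]. The `innermost_strides` helper and the 8-byte element size are assumptions for illustration; check the element type actually used in mat_mul.c.

```python
def innermost_strides(inner, n, elem_size=8):
    """Byte stride of each array access per step of the innermost loop.

    A stride of 0 means the element stays fixed during the inner loop,
    elem_size means sequential access, n * elem_size means a jump of a
    whole matrix row.
    """
    # Coefficient of each loop variable in each index expression.
    coeff = {
        "r":  {"i": n, "j": 1, "k": 0},   # r[i*N + j]
        "m1": {"i": n, "j": 0, "k": 1},   # m1[i*N + k]
        "m2": {"i": 0, "j": 1, "k": n},   # m2[k*N + j]
    }
    return {array: c[inner] * elem_size for array, c in coeff.items()}


for order in ("ikj", "ijk", "jki"):
    print(order, innermost_strides(order[-1], n=1024))
```

With j innermost no access jumps by a full row, whereas with i innermost two of the three accesses do, which is consistent with the best and worst orderings named above.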

Which brings us to the task at hand: using the RDPMC instruction, calculate the number of L2 cache misses for each of the two multiplications without performing any context switches (hint: look at gcc extended asm and the following macro from mat_mul.c).

#define rdpmc(ecx, eax, edx)    \
    asm volatile (              \
        "rdpmc"                 \
        : "=a"(eax),            \
          "=d"(edx)             \
        : "c"(ecx))
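RDPMC returns the selected counter split across EDX (high half) and EAX (low half), so the two outputs of the macro must be joined before subtracting a "before" reading from an "after" reading. The arithmetic is sketched below in Python for brevity; in mat_mul.c the same would be done in C with 64-bit integers. The helper names, the sample readings and the 48-bit counter width are assumptions (the real width is reported in CPUID.0AH:EAX[23:16]).

```python
def combine(eax, edx):
    """Join the two 32-bit halves returned by RDPMC into one value."""
    return (edx << 32) | eax


def counter_delta(before, after, width=48):
    """Events counted between two reads, tolerating one wraparound.

    The 48-bit default width is an assumption, not a guaranteed value.
    """
    return (after - before) % (1 << width)


# Hypothetical readings taken just before and just after a wraparound.
start = combine(eax=0xFFFFFFF0, edx=0x0000FFFF)
end = combine(eax=0x00000010, edx=0x00000000)
print(counter_delta(start, end))   # 32
```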

A word of caution: remember that each logical core has its own PMC0 counter, so make sure to use taskset in order to set the CPU affinity of the process. If you don't, the process may be passed around different cores and the counter value becomes unreliable.

$ taskset 0x01 ./mat_mul 1024
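The 0x01 argument is a bitmask in which bit n selects CPU n, so 0x01 pins the process to CPU 0. A small Python sketch for building such masks from a list of CPU numbers follows; the `affinity_mask` helper is hypothetical.

```python
def affinity_mask(cpus):
    """Return the bitmask in which bit n is set for every CPU n in cpus."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return mask


print(hex(affinity_mask([0])))         # 0x1
print(hex(affinity_mask([1, 3, 5])))   # 0x2a
```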

06. [10p] Feedback

Please take a minute to fill in the feedback form for this lab.