Lab 01 - CPU Monitoring (Linux)

Objectives

- Offer an introduction to Performance Monitoring

- Present the main CPU metrics and how to interpret them

- Get you to use various tools for monitoring the performance of the CPU

- Familiarize you with the x86 Hardware Performance Counters

Proof of Work

Before you start, create a Google Doc. Here, you will add screenshots / code snippets / comments for each exercise. Whatever you decide to include, it must prove that you managed to solve the given task (so don't show just the output, but how you obtained it and what conclusion can be drawn from it). If you decide to complete the feedback for bonus points, include a screenshot with the form submission confirmation, but not with its contents.

When done, export the document as a PDF and upload it to the appropriate assignment on Moodle. The deadline is 23:55 on Friday.

Introduction

Performance Monitoring is the process of checking a set of metrics in order to ascertain the health of the system. Normally, the information gleaned from these metrics is in turn used to fine tune the system in order to maximize its performance. As you may imagine, both acquiring and interpreting this data require at least some knowledge of the underlying operating system.

In the following four labs, we'll discuss the four main subsystems that are likely to have an impact either on a single process, or on the system as a whole. These are: CPU, memory, disk I/O and networking. Note that these subsystems are not independent of one another. For example, a web application may be dependent on the network stack of the kernel, whose implementation determines the number of packets processed in a given amount of time. However, protocols that require checksum calculation (e.g.: TCP) will want to use a highly optimized implementation of this function (one written directly in assembly). If your architecture does not have such an implementation and falls back to using the one written in C, you may prefer changing your choice of protocol.

When dealing strictly with the CPU, these are a few things to look out for:

Context Switches

A context switch is a transition from one runtime environment to another. This usually comes in the form of a privileged call into kernel space (e.g.: a system call) followed by a return from it. Whenever this happens, a copy of your register state must be saved and later restored, which takes up some time.

Note, however, how each individual process has its own address space, but in every address space, the only constant is the kernel. Why is that? Well, when the time slice of a process runs out and another is scheduled in, the kernel must perform a Translation Lookaside Buffer (TLB) flush. Otherwise, memory accesses in the new process might erroneously end up targeting the memory of the previous process. Yes, some shared objects (libraries) could have been mapped at the same virtual addresses and deleting those entries from the TLB is a shame, but there's no workaround for that. Now, back to our original question: why is the kernel mapped identically in each virtual address space? The reason is that when you perform a context switch into the kernel after calling open() or read(), a TLB flush is not necessary. If you wanted to write your own kernel, you could theoretically isolate the kernel's address space (like any other process), but you would see a huge performance drop.

The takeaway is that some context switches are more expensive than others. Not being able to keep a process scheduled on a single core 100% of the time comes with a huge cost (flushing the TLB). This being said, context switches from user space to kernel space are still expensive operations. As Terry Davis once demonstrated in his TempleOS, running everything at the same privilege level can reduce the cost of context switches by orders of magnitude.
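The scheduler-driven switches described above (a process being taken off a core) can be observed from user space. The following is a minimal Python sketch, assuming a Unix system where the standard resource module reports the ru_nvcsw and ru_nivcsw counters. The helper names context_switches and switches_during are made up for this illustration. Note that these counters do not include plain system call entries and exits.

```python
import resource
import time


def context_switches():
    """Return (voluntary, involuntary) context switch counts for this process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_nvcsw, usage.ru_nivcsw


def switches_during(action):
    """Run action() and return how many context switches happened meanwhile."""
    vol_before, invol_before = context_switches()
    action()
    vol_after, invol_after = context_switches()
    return vol_after - vol_before, invol_after - invol_before


def nap_repeatedly():
    # Sleeping blocks the process, so the kernel schedules something else;
    # each sleep normally shows up as a voluntary context switch.
    for _ in range(20):
        time.sleep(0.01)


if __name__ == "__main__":
    vol, invol = switches_during(nap_repeatedly)
    print(f"voluntary: {vol}, involuntary: {invol}")
```

A process that blocks often (sleeping, waiting for I/O) accumulates voluntary switches, while one that is preempted while still runnable accumulates involuntary ones.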

CPU Utilization

Each process is given a time slice for it to utilize however it sees fit. The way that time is utilized can prove to be a meaningful metric. There are two ways that we can look at this data: system level or process level.

At system level, the data is offered by the kernel in /proc/stat (details in man 5 proc; look for this file). For each core, we get the number of time units (1/USER_HZ seconds each; USER_HZ is configured at compile time in the kernel and is usually 100, so one unit ~= 10ms) that the core has spent on a certain type of task. The more commonly encountered are of course:

- user: Running unprivileged code in ring3.

- system: Running privileged code in ring0.

- idle: Not running anything. In this case, the core voltage & frequency is usually reduced.

- nice: Same as user, but refers to processes with a nice > 0 personality. More details here.

The less reliable / relevant ones are:

- iowait: Time waiting for I/O. Not reliable because this is usually done via Direct Memory Access at kernel level and processes that perform blocking I/O operations (e.g.: read(), with the exception of certain types of files, such as sockets, opened with O_NONBLOCK) automatically yield their remaining time for another CPU bound process to be rescheduled.

- (soft)irq: Time servicing interrupts. This has nothing to do with user space processes. A high number can indicate high peripheral activity.

- steal: If the current system runs under a Hypervisor (i.e.: you are running in a Virtual Machine), know that the HV has every right to steal clock cycles from any VM in order to satisfy its own goals. Just like the kernel can steal clock cycles from a regular process to service an interrupt from, let's say, the Network Interface Controller, so can the HV steal clock cycles from the VM for exactly the same purpose.

- guest: The opposite of steal. If you are running a VM, then the kernel can take the role of a HV in some capacity (see kvm). This is the amount of time the CPU was used to run the guest VM.

At process level, the data can be found in /proc/[pid]/stat (see man 5 proc). Note that in this case, the amount of information the kernel interface provides is much more varied. While we still have utime (user time) and stime (system time), note that we also have statistics for child processes that have not been orphaned: cutime, cstime.
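As an illustration of the process-level interface, here is a hedged Python sketch that extracts the four CPU time fields. The field positions (14 to 17) are the ones listed in man 5 proc; parse_pid_stat and cpu_seconds are names chosen for this example, and the conversion to seconds assumes that os.sysconf("SC_CLK_TCK") reports the tick rate used by the file.

```python
import os


def parse_pid_stat(text):
    """Extract CPU time fields (in clock ticks) from a /proc/[pid]/stat line."""
    # Field 2 (comm) is wrapped in parentheses and may itself contain spaces
    # or parentheses, so split on the LAST ')' instead of on whitespace.
    rest = text[text.rindex(")") + 1:].split()
    # rest[0] is field 3 (state); utime..cstime are fields 14..17 in proc(5).
    utime, stime, cutime, cstime = (int(v) for v in rest[11:15])
    return {"utime": utime, "stime": stime, "cutime": cutime, "cstime": cstime}


def cpu_seconds(pid="self"):
    """Return the CPU times of a process converted from ticks to seconds."""
    with open(f"/proc/{pid}/stat") as f:
        ticks = parse_pid_stat(f.read())
    hz = os.sysconf("SC_CLK_TCK")  # tick rate exposed to user space
    return {name: value / hz for name, value in ticks.items()}


if __name__ == "__main__":
    print(cpu_seconds())
```

Splitting on the last closing parenthesis matters because a process is free to pick a name such as "a) b", which would shift every field if the line were split naively on spaces.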

Although you may find many tools that offer similar information, remember that these files are the origin. Another thing to keep in mind is that this data is representative for the entire session, i.e.: from system boot or from process launch. If you want to interpret it in a meaningful manner, you need to get two data points and know the time interval between their acquisition.
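The "two data points" idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column order follows man 5 proc, iowait is counted as idle time (a choice, not a rule), and the names parse_proc_stat, busy_percent and read_proc_stat are invented for this example.

```python
import time

# Column order of the "cpu" lines in /proc/stat, as listed in proc(5).
FIELDS = ("user", "nice", "system", "idle", "iowait",
          "irq", "softirq", "steal", "guest", "guest_nice")


def parse_proc_stat(text):
    """Map each 'cpu*' line of /proc/stat to a dict of tick counters."""
    cpus = {}
    for line in text.splitlines():
        if line.startswith("cpu"):
            name, *values = line.split()
            cpus[name] = dict(zip(FIELDS, map(int, values)))
    return cpus


def busy_percent(before, after):
    """Percentage of non-idle time between two samples of the same CPU."""
    # Guest time is already included in user/nice, so leave it out of the sum.
    counted = [f for f in FIELDS if not f.startswith("guest")]
    delta = {f: after.get(f, 0) - before.get(f, 0) for f in counted}
    total = sum(delta.values())
    idle = delta["idle"] + delta["iowait"]
    return 100.0 * (total - idle) / total if total else 0.0


def read_proc_stat():
    with open("/proc/stat") as f:
        return parse_proc_stat(f.read())


if __name__ == "__main__":
    first = read_proc_stat()
    time.sleep(1)  # the interval between the two data points
    second = read_proc_stat()
    for cpu in first:
        print(f"{cpu}: {busy_percent(first[cpu], second[cpu]):.1f}% busy")
```

Because only the difference between the samples is used, the result describes the last second rather than the whole time since boot.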

Scheduling

When a CPU frees up, the kernel must decide which process gets to run next. To this end, it uses the Completely Fair Scheduler (CFS). Normally, we don't question the validity of the scheduler's design. That's a few levels above our paygrade. What we can do is adjust the value of /proc/sys/kernel/sched_min_granularity_ns (on kernels 5.13 and newer, the same tunable is exposed through debugfs, as /sys/kernel/debug/sched/min_granularity_ns). This virtual file contains the minimum number of nanoseconds that a task is allocated when scheduled on the CPU. A lower value guarantees that each process will be scheduled sooner rather than later, which is a good trait of an interactive system (e.g.: Android, where you don't want unresponsive menus). A greater value, however, is better when you are doing batch processing (e.g.: rendering a video). We noted previously that switching active processes on a CPU core is an expensive operation. Thus, allowing each process to run for longer will reduce the CPU dead time in the long run.
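To get a feel for this trade-off, here is a back-of-the-envelope sketch in Python. It assumes a fixed, hypothetical cost of 5 us per process switch (the real cost depends on the hardware and on how much cache and TLB state is lost) and that every task uses up its whole slice. dead_time_fraction is a name chosen for this example.

```python
def dead_time_fraction(slice_ns, switch_cost_ns):
    """Fraction of CPU time lost to switching when every slice is used up."""
    return switch_cost_ns / (slice_ns + switch_cost_ns)


if __name__ == "__main__":
    SWITCH_COST_NS = 5_000  # hypothetical cost of one process switch (5 us)
    for slice_ns in (100_000, 750_000, 3_000_000, 10_000_000):
        lost = dead_time_fraction(slice_ns, SWITCH_COST_NS)
        print(f"slice = {slice_ns / 1e6:6.2f} ms -> {lost:.3%} dead time")
```

The longer the slice, the smaller the share of time spent switching, at the price of each task waiting longer for its next turn.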

Another aspect that's not necessarily as talked about is core scheduling. Given that you have more available cores than active tasks, on what core do you schedule a task? When answering this question, we need to keep in mind a few things: the CPU does not operate at a constant frequency. The voltage of each core, and consequently its frequency, varies based on the amount of active time. That being said, if a core has been idle for quite some time and suddenly a new task is scheduled, it will take some time to get it from its low-power frequency to its maximum. But now consider this: what if the workload is not distributed among all cores and a small subset of cores overheats? The CPU is designed to forcibly reduce the frequency in such cases, and if the overall temperature exceeds a certain point, shut down entirely.

At the moment, CFS likes to spread out the tasks to all cores. Of course, each process has the right to choose the cores it's comfortable to run on (more on this in the exercises section). Another reason why this may be preferable that we haven't mentioned before is not invalidating the CPU cache. L1 and L2 caches are specific to each physical core. L3 is accessible to all cores. However, L1 and L2 have an access time of 1-10ns, while L3 can go as high as 30ns. If you have some time, read a bit about Nest, a newly proposed scheduler that aims to keep scheduled tasks on “warm cores” until it becomes necessary to power up idle cores as well. Can you come up with situations when Nest may be better or worse than CFS?
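A process choosing its own cores can be sketched with Python's standard library. The example below assumes Linux, where os.sched_getaffinity and os.sched_setaffinity are available; pin_to_cpus is a hypothetical helper introduced only for this illustration.

```python
import os


def pin_to_cpus(cpus, pid=0):
    """Restrict a process (0 = the caller) to the given CPUs; return the new mask."""
    os.sched_setaffinity(pid, cpus)
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    allowed = os.sched_getaffinity(0)
    print("allowed CPUs:", sorted(allowed))
    # Pin ourselves to the lowest-numbered CPU we are allowed to use.
    print("now pinned to:", sorted(pin_to_cpus({min(allowed)})))
    # Restore the original mask so later work is not confined to one core.
    pin_to_cpus(allowed)
```

The affinity mask is inherited by child processes, so anything launched while the mask is restricted stays on the same cores unless it changes the mask itself.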

Tasks

An archive containing all the files needed for the tasks can be found here: labep_01.zip

01. [30p] Vmstat

The vmstat utility provides a good low-overhead view of system performance. Since vmstat is such a low-overhead tool, it is practical to have it running even on heavily loaded servers when it is needed to monitor the system’s health.

[10p] Task A - Monitoring stress

Run vmstat on your machine with a 1 second delay between updates. Notice the CPU utilisation (info about the output columns here).

In another terminal, use the stress command to start N CPU workers, where N is the number of cores on your system. Do not pass the number directly. Instead, use command substitution.

Note: if you are trying to solve the lab on fep and you don't have stress installed, try cloning and compiling stress-ng.

[10p] Task B - How does it work?

Let us look at how vmstat works under the hood. We can assume that all these statistics (memory, swap, etc.) can not be normally gathered in userspace. So how does vmstat get these values from the kernel? Or rather, how does any process interact with the kernel? Most obvious answer: system calls.

$ strace vmstat

“All well and good. But what am I looking at?”

What you should be looking at are the system calls after the two writes that display the output header (hint: it has to do with /proc/ file system). So, what are these files that vmstat opens?

$ file /proc/meminfo
$ cat /proc/meminfo
$ man 5 proc

The manual should contain enough information about what these kernel interfaces can provide. However, if you are interested in how the kernel generates the statistics in /proc/meminfo (for example), a good place to start would be meminfo.c (but first, SO2 wiki).
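Since these kernel interfaces are plain text, any program can consume them the same way. As a rough sketch (not how vmstat itself is implemented), the following Python snippet turns the "Key: value kB" layout of /proc/meminfo into a dictionary; parse_meminfo is a name made up for this example.

```python
def parse_meminfo(text):
    """Turn 'Key:   value kB' lines into a {key: value} dict."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            # Most values are in kB; a few (e.g. HugePages_Total) are plain counts.
            info[key.strip()] = int(fields[0])
    return info


if __name__ == "__main__":
    with open("/proc/meminfo") as f:
        mem = parse_meminfo(f.read())
    print(f"MemTotal: {mem['MemTotal']} kB, MemFree: {mem['MemFree']} kB")
```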

[10p] Task C - USO flashbacks (1)

Write a one-liner that uses vmstat to report complete disk statistics and sort the output in descending order based on total reads column.

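Hint: tail -n +3 prints its input starting from the third line, which lets you drop the two header lines before sorting.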

02. [30p] Mpstat

Open fact_rcrs.zip and look at the code.

[10p] Task A - Python recursion depth

Try to run the script while passing 1000 as a command line argument. Why does it crash?

Luckily, Python allows you to both retrieve the current recursion limit and set a new value for it. Increase the recursion limit so that the process will never crash, regardless of input (assume that it still has a reasonable upper bound).
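The two calls in question are sys.getrecursionlimit() and sys.setrecursionlimit(). The sketch below only demonstrates them on a toy function; depth and call_with_limit are hypothetical names, unrelated to the script in the archive, and the limits used are arbitrary.

```python
import sys


def depth(n):
    """Recurse n levels deep; a stand-in for any recursive function."""
    return 0 if n == 0 else 1 + depth(n - 1)


def call_with_limit(n, limit):
    """Run depth(n) under a temporary recursion limit, then restore the old one."""
    old = sys.getrecursionlimit()
    sys.setrecursionlimit(limit)
    try:
        return depth(n)
    except RecursionError:
        return None  # the limit was hit before the recursion finished
    finally:
        sys.setrecursionlimit(old)


if __name__ == "__main__":
    print("default limit:", sys.getrecursionlimit())
    print("depth 3000, limit 1000:", call_with_limit(3000, 1000))
    print("depth 3000, limit 5000:", call_with_limit(3000, 5000))
```

Keep in mind that the limit exists to protect the interpreter's stack, so an excessively large value trades a clean RecursionError for a possible hard crash.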

[10p] Task B - CPU affinity

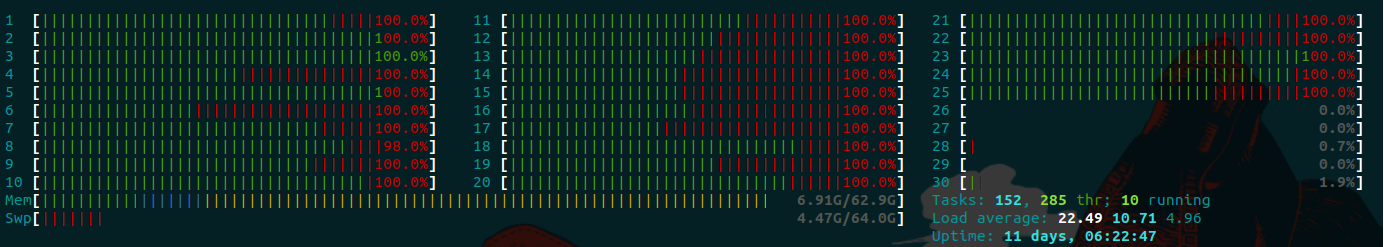

Run the script again, this time passing 10000. Use mpstat to monitor the load on each individual CPU at 1s intervals. The one with close to 100% load will be the one running our script. Note that the process might be passed around from one core to another.

Stop the process. Use stress to create N-1 CPU workers, where N is the number of cores on your system. Use taskset to set the CPU affinity of the N-1 workers to CPUs 1-(N-1) and then run the script again. You should notice that the process is scheduled on cpu0.

Note: to get the best performance when running a process, make sure that it stays on the same core for as long as possible. Don't let the scheduler decide this for you, if you can help it. Allowing it to bounce your process between cores can drastically impact the efficient use of the cache and the TLB. This holds especially true when you are working with servers rather than your personal PCs. While the problem may not manifest on a system with only 4 cores, you can't guarantee that it also won't manifest on one with 40 cores. When running several experiments in parallel, aim for something like this:

[10p] Task C - USO flashbacks (2)

Write a bash command that binds CPU stress workers on your odd-numbered cores (i.e.: 1,3,5,…). The list of cores and the number of stress workers must NOT be hardcoded, but constructed based on nproc (or whatever else you fancy).

In your submission, include both the bash command and an mpstat capture to prove that the command is working.

03. [15p] Zip with compression levels

The zip command is used for compression and file packaging under Linux/Unix operating systems. It provides 10 levels of compression, where:

- level 0 : provides no compression, only packaging

- level 6 : used as default compression level

- level 9 : provides maximum compression

$ zip -5 file.zip file.txt
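The effect of the compression level can be previewed without zip itself. The sketch below is only a stand-in under stated assumptions: it uses Python's zlib module, which implements the same DEFLATE algorithm that zip uses by default and accepts the same 0-9 levels, on synthetic data. measure_levels is a hypothetical helper, and the numbers it prints are not what zip would report for the lab files.

```python
import time
import zlib


def measure_levels(data):
    """Return {level: (compressed_size, seconds)} for DEFLATE levels 0-9."""
    results = {}
    for level in range(10):
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        results[level] = (len(compressed), time.perf_counter() - start)
    return results


if __name__ == "__main__":
    # Synthetic, highly repetitive input; real files will behave differently.
    data = b"the quick brown fox jumps over the lazy dog\n" * 50_000
    for level, (size, seconds) in measure_levels(data).items():
        print(f"level {level}: {size / len(data):7.2%} of original, "
              f"{seconds * 1e3:.2f} ms")
```

Level 0 produces output slightly larger than the input, because the data is stored as-is with some framing added around it.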

[10p] Task A - Measurements

Write a script to measure the compression rate and the time required for each level. Use the following files:

[5p] Task B - Plot

Fill the data you obtained into the python3 script in plot.zip.

Make sure you have python3 and python3-matplotlib installed.

04. [25p] llvm-mca

llvm-mca is a machine code analyzer that simulates the execution of a sequence of instructions. By leveraging high-level knowledge of the micro-architectural implementation of the CPU, as well as its execution pipeline, this tool is able to determine the execution speed of said instructions in terms of clock cycles. More importantly though, it can highlight possible contentions of two or more instructions over CPU resources or rather, its ports.

Note that llvm-mca is not the most reliable tool when predicting the precise runtime of an instruction block (see this paper for details). After all, CPUs are not as simple as the good old AVR microcontrollers. While calculating the execution time of an AVR linear program (i.e.: no conditional loops) is as simple as adding up the clock cycles associated to each instruction (from the reference manual), things are never that clear-cut when it comes to CPUs. CPU manufacturers such as Intel often implement hardware optimizations that are not documented or even publicized. For example, we know that the CPU caches instructions in case a loop is detected. If this is the case, then the instructions are dispatched once again from the buffer, thus avoiding extra instruction fetches. What happens though, if the size of the loop's contents exceeds this buffer size? Obviously, without knowing certain aspects such as this buffer size, not to mention anything about microcode or unknown hardware optimizations, it is impossible to give accurate estimates.

[5p] Task A - Preparing the input

Since llvm-mca requires assembly code as input, we first need to translate the C source provided in the archive. Because the assembly parser it utilizes is the same as clang's, use clang to compile the C program, but stop after the LLVM generation and optimization stages, when the target-specific assembly code is generated.

LLVM-MCA-BEGIN and LLVM-MCA-END markers can be parsed (as assembly comments) in order to restrict the scope of the analysis.

These markers can also be placed in C code (see gcc extended asm and llvm inline asm expressions):

asm volatile("# LLVM-MCA-BEGIN" ::: "memory");

Remember, however, that this approach is not always desirable, for two reasons:

- Even though this is just a comment, the volatile modifier can pessimize optimization passes. As a result, the generated code may not correspond to what would normally be emitted.

- Some code structures can not be included in the analysis region. For example, if you want to include the contents of a for loop, doing so by injecting assembly meta comments in C code will exclude the incrementation and condition check (which are also executed on every iteration).

[10p] Task B - Analyzing the assembly code

After generating the assembly code, use llvm-mca to inspect its expected throughput and “pressure points” (check out this example).

One important thing to remember is that llvm-mca does not simulate the behaviour of each instruction, but only the time required for it to execute. In other words, if you load an immediate value in a register via mov rax, 0x1234, the analyzer will not care what the instruction does (or what the value of rax even is), but how long it takes the CPU to do it. The implication is quite significant: llvm-mca is incapable of analyzing complex sequences of code that contain conditional structures, such as for loops or function calls. Instead, given the sequence of instructions, it will pass through each of them one by one, ignoring their intended effect: conditional jump instructions will fall through, call instructions will be passed over without even considering the cost of the associated ret, etc. The closest we can come to analyzing a loop is by reducing the analysis scope via the aforementioned LLVM-MCA-* markers and controlling the number of simulated iterations from the command line (the -iterations option), so that the simulation resembles an actual loop.

A very short description of each port's main usage:

- Port 0,1: arithmetic instructions

- Port 2,3: load operations, AGU (address generation unit)

- Port 4: store operations, AGU

- Port 5: vector operations

- Port 6: integer and branch operations

- Port 7: AGU

The significance of the SKL ports reported by llvm-mca can be found in the Skylake machine model config. To find out if your CPU belongs to this category, RTFS and run an inxi -Cx.

Next, look at the number of micro-operations (#uOps) associated to each instruction. These are the number of primitive operations that each instruction (from the x86 ISA) is broken into. Fun and irrelevant fact: the hardware implementation of certain instructions can be modified via microcode upgrades.

Anyway, keeping in mind this #uOps value (for each instruction), we'll notice that the sum of all resource pressures per port will equal that value. In other words, resource pressure means the average number of micro-operations that depend on that resource.

[10p] Task C - In-depth examination

Now that you've got the hang of things, try generating asm code with different optimization levels (e.g.: -O1, -O2, -O3, -Os).

Use the -bottleneck-analysis flag to identify contentious instruction sequences. Explain the reason to the best of your abilities.

05. [10p] Bonus - Hardware Counters

06. [10p] Feedback

Please take a minute to fill in the feedback form for this lab.