Assignment

1. Context

In the last few years, the number of Internet users has seen unprecedented growth. Whether these users are human beings or machines (IoT devices, bots, other services, mobile clients, etc.), they place a great burden on the systems they request services from. As a consequence, the administrators of those systems have had to adapt and come up with solutions for handling the increasing traffic efficiently. In this assignment, we will focus on one of these solutions: load balancing.

A load balancer is a proxy meant to distribute traffic across a number of servers, usually called services. Adding a load balancer to a distributed system significantly increases the capacity and reliability of those services. That is why almost every modern cloud architecture has at least one layer of load balancing.

2. Architecture

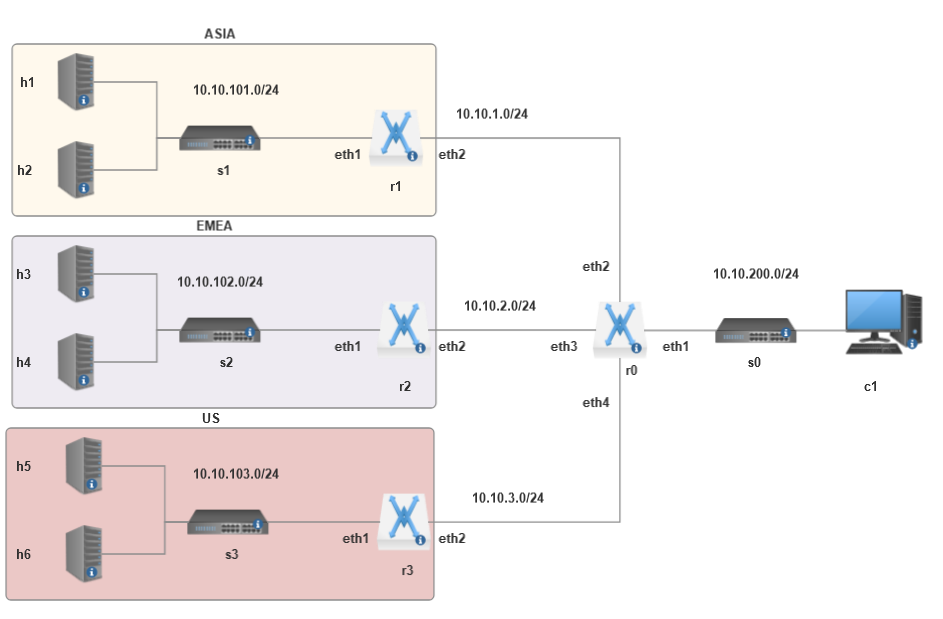

We propose a topology that mimics a cloud system acting as a global service, replicated across multiple machines/workers and regions, which must serve the clients as efficiently as possible. Even if all the requests come from users in the same country, the latencies expected from the cloud regions differ. Moreover, the number of machines available in each region varies, and all these aspects can have an impact on the overall performance of the system.

That is why a dedicated proxy, which decides where to route the requests coming from the clients, had to be added to the system. This proxy is our load balancer, and in this particular scenario it is divided into 2 main components:

- A few routers, meant to forward the requests coming from the clients to an actual machine available in a certain region

- A command unit (c1) that is supposed to identify the number of requests that are about to hit our system and decide, based on this number, how to efficiently utilize the routing units.

The diagram at the beginning of this section gives an overview of the proposed architecture.

In this assignment, you will focus on a performance analysis of the topology and on the Command Unit logic; the other components of the system are already implemented.

3. Mininet Topology

Mininet is a network emulator which creates a network of virtual hosts, switches, controllers, and links. Mininet hosts run standard Linux network software, and its switches support OpenFlow for highly flexible custom routing and Software-Defined Networking.

The topology above was built using Mininet. Consequently, you will have to use its API to command the servers and the client/command unit. The topology has three layers:

- First layer - the network between c1 and the first router r0 - 10.10.200.0/24

- Second layer - the networks between r0 and the region routers r1,r2,r3 - 10.10.x.0/24

- Third layer - the networks between region routers and the web servers - 10.10.10x.0/24
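The addressing scheme above can be enumerated with Python's standard `ipaddress` module. This is only a sketch: it assumes that `x` takes the values 1, 2 and 3 (one per region router r1, r2, r3), which the text suggests but does not state, and the function name `topology_subnets` is illustrative.

```python
import ipaddress


def topology_subnets(regions=(1, 2, 3)):
    """Return the subnets of each layer, assuming x is the region index."""
    return {
        # Network between c1 and the first router r0.
        "layer1": [ipaddress.ip_network("10.10.200.0/24")],
        # Networks between r0 and each region router (10.10.x.0/24).
        "layer2": [ipaddress.ip_network(f"10.10.{x}.0/24") for x in regions],
        # Networks between each region router and its web servers (10.10.10x.0/24).
        "layer3": [ipaddress.ip_network(f"10.10.10{x}.0/24") for x in regions],
    }
```

Calling `topology_subnets()` and printing each layer gives a quick checklist of the networks to look for when inspecting routes from the CLI.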

4. Environment

To set up your environment, you have a few options:

- You can use the official Mininet latest release VM, which you can get from here.

- Make a local setup using the steps from **Option 2: Native Installation from Source**

5. Implementation

First of all, you have to deploy the topology, measure its performance under different test cases, and collect data to get an idea of its limits.

Secondly, you have to work on the command unit by editing the client to implement some optimisations on the traffic flow of the topology. All components of the topology are written in Python 3. Given a number of requests N as input, try various strategies of calling the servers available in the 3 regions so that your clients experience response times that are as low as possible. There are no constraints on how you read the number of requests, what Python library you use to call the forwarding unit, or how you plot the results.

Because we are working with HTTP requests, the client in its current state is able to make a single request and write the result to a file (you need to monitor the client exclusively in order to get outputs from it). You can build on that and modify it as you please to fit your needs.
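As an illustration of building on the single-request client, the sketch below times one HTTP GET with the standard library and appends the result to a file. It is not the provided client: the function names, the CSV-style line format and the `opener` parameter (there only so the function can be exercised without a network) are all assumptions.

```python
import time
import urllib.request


def timed_get(url, timeout=5.0, opener=urllib.request.urlopen):
    """Fetch url once and return (status, elapsed seconds, body bytes)."""
    start = time.perf_counter()
    with opener(url, timeout=timeout) as response:
        body = response.read()
        status = response.status
    elapsed = time.perf_counter() - start
    return status, elapsed, body


def log_result(path, url, status, elapsed):
    """Append one comma-separated line per request to the output file."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(f"{url},{status},{elapsed:.6f}\n")
```

A call such as `log_result("results.csv", url, *timed_get(url)[:2])` records one measurement; the actual server address and port depend on the topology and are not assumed here.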

However, we strongly suggest you work in a virtual environment where you install all your pip dependencies. By doing so, you will keep your global workspace free of useless packages and you can easily specify just those packages required to run your code:

pip freeze > requirements.txt

Please note that we will definitely apply penalties if the requirements.txt file contains packages that are not used. Always imagine that you are in a real production environment. You can find out more about virtual environments here.

6. Objectives and Evaluation

(10p) I. Prerequisites

(3p) A. Environment setup

If you downloaded the virtual machine, import it. Its credentials are:

Username: mininet

Password: mininet

If you chose a local setup, make sure everything works as expected.

(7p) B. Run the topology

Clone the repo from above and check it by running:

    $ sudo python3 topology.pyc -h
    usage: topology.py [-h] [-t] user

    positional arguments:
      user        your moodle username

    optional arguments:
      -h, --help  show this help message and exit
      -t, --test  set it if you want to run tests

You will find that there are 2 arguments: the first is your moodle username, and the second is an optional flag to run a test. The test flag calls the function inside test.py. Because we wanted to keep the topology link metrics hidden, we had to ship the topology as a .pyc file and give you test.py as a means to create automated tests for the topology.

    $ sudo python3 topology.pyc sandu.popescu
    [...]
    No test run, starting cli
    *** Starting CLI:
    containernet>

Example - calling the test function from test.py and then continuing with the CLI:

    $ sudo python3 topology.pyc sandu.popescu -t
    [...]
    Running base test with only one server
    Done
    *** Starting CLI:
    stopping h1
    containernet>

The topology script will:

- Create routers, switches and hosts

- Add links between each node with custom metrics

- Add routing rules

- Run the test if available

- And then connect the CLI

Inside the test script, there is an example of the usage of the API to run commands on the host machines in an automated manner.
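To give an idea of what such automation can look like, the sketch below pings one node from another and parses the loss percentage and average round-trip time. It relies on the Mininet calls `net.get(name)`, `node.IP()` and `node.cmd(command)`; how test.py actually receives the running network object is not stated here, so the `net` parameter and the function name `ping_stats` are assumptions.

```python
import re


def ping_stats(net, src, dst, count=5):
    """Ping dst from src and return (loss percentage, average RTT in ms).

    Either value is None if the ping summary could not be parsed.
    """
    source, target = net.get(src), net.get(dst)
    # cmd() runs the command on the emulated host and returns its output.
    output = source.cmd(f"ping -c {count} {target.IP()}")
    loss = re.search(r"([\d.]+)% packet loss", output)
    # Summary line looks like: rtt min/avg/max/mdev = a/b/c/d ms
    rtt = re.search(r"= [\d.]+/([\d.]+)/", output)
    return (
        float(loss.group(1)) if loss else None,
        float(rtt.group(1)) if rtt else None,
    )
```

For example, `ping_stats(net, "c1", "h1")` would measure the path from the command unit to the host h1 that appears in the test output above.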

Alternatively, you can run commands from any node by specifying the node and then the command. For example, if you want to ping the r1 router from the c1 host, you can run the following:

    No test run, starting cli
    *** Starting CLI:
    containernet> c1 ping r1

(30p) II. Evaluation - System Limits Analysis

Before implementing your own solutions to make traffic more efficient, you should first analyze the limits of the system. You should find out the answer to questions such as the following:

- How many requests can be handled by a single machine?

- What is the latency of each region?

- Which server path has the smallest response time? Which has the largest?

- What is the path that has the greatest loss percentage?

- What is the latency introduced by the first router in our path?

- Is there any bottleneck in the topology? How would you solve this issue?

- What is your estimation regarding the latency introduced?

- What downsides do you see in the current architecture design?

Your observations should be written in the Performance Evaluation Report accompanied by relevant charts (if applicable).
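Several of the questions above come down to summarising response-time samples per path. One possible way to do that with the standard `statistics` module is sketched below; the choice of metrics (median and 95th percentile) and the function names are assumptions, not requirements of the assignment.

```python
import statistics


def summarize(samples):
    """Summarise the response times (in seconds) measured on one path."""
    ordered = sorted(samples)
    # statistics.quantiles needs at least two data points.
    if len(ordered) > 1:
        p95 = statistics.quantiles(ordered, n=20)[-1]
    else:
        p95 = ordered[0]
    return {
        "count": len(ordered),
        "min": ordered[0],
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p95": p95,
        "max": ordered[-1],
    }


def rank_paths(measurements):
    """Order path names from fastest to slowest by median response time."""
    return sorted(
        measurements,
        key=lambda name: statistics.median(measurements[name]),
    )
```

Here `measurements` is a dictionary mapping a path label of your choosing to its list of samples; the first and last entries returned by `rank_paths` point at the fastest and slowest paths.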

(50p) III. Implementation

(30p) A. Solution

Find methods to optimize traffic. You have to come up with 3-5 methods to optimize it and test them on the command unit/client (you can write them as part of the client). Your solution should try various ways of calling the exposed endpoints of the topology depending on the number of requests your system must serve. For instance, if you only have 10 requests, you might get away by just calling a certain endpoint, but if this number increases, then you might want to try something more complex.

The number of requests your system should serve is not imposed, but you should definitely try a sufficiently large range of request batches in order to properly evaluate your policies. Choosing a relevant number is part of the task.
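To make the idea of switching strategy with the batch size concrete, here is a sketch of three simple policies and a selector. Nothing in it is prescribed by the assignment: the policy names, the endpoint labels, the `latency` dictionary (assumed to hold positive values you measured yourself) and the threshold of 10 requests, borrowed from the example above, are all illustrative.

```python
import itertools
import random


def round_robin(endpoints, n):
    """Cycle through the endpoints in order."""
    cycle = itertools.cycle(endpoints)
    return [next(cycle) for _ in range(n)]


def fastest_only(endpoints, n, latency):
    """Send every request to the endpoint with the lowest measured latency."""
    best = min(endpoints, key=lambda endpoint: latency[endpoint])
    return [best] * n


def weighted_random(endpoints, n, latency, seed=None):
    """Pick endpoints at random, favouring those with lower latency."""
    rng = random.Random(seed)
    weights = [1.0 / latency[endpoint] for endpoint in endpoints]
    return rng.choices(endpoints, weights=weights, k=n)


def choose_plan(endpoints, n, latency, small_batch=10):
    """Use the fastest endpoint for small batches and spread larger ones."""
    if n <= small_batch:
        return fastest_only(endpoints, n, latency)
    return weighted_random(endpoints, n, latency)
```

Each function returns a list with one endpoint per request, so the same sending code can execute any policy.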

Response Object

Since the servers run an HTTP server script, you should expect HTTP responses and adapt your client to this type of traffic.

(20p) B. Efficient Policies Comparison

Compare your efficient policies for a relevant range of request batch sizes and write your observations in the Performance Evaluation Report file, together with some relevant charts.
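A comparison of this kind could be driven by a small harness like the one sketched below, which runs every policy for every batch size and records the total wall-clock time. It assumes that a policy is a function mapping a batch size to a list of endpoints and that `send` is your own function performing one request; the thread pool and its default size of 16 workers are arbitrary choices.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_batch(plan, send, workers=16):
    """Send one request per plan entry concurrently; return total wall time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for completion and re-raises any exception from send().
        list(pool.map(send, plan))
    return time.perf_counter() - start


def compare(policies, batch_sizes, send):
    """Return {policy name: {batch size: wall time in seconds}}."""
    results = {}
    for name, make_plan in policies.items():
        results[name] = {
            n: run_batch(make_plan(n), send) for n in batch_sizes
        }
    return results
```

The nested dictionary it returns maps directly onto one chart line per policy, with the batch size on the horizontal axis.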

(10p) IV. Documentation

You should write a high-quality Performance Evaluation Report document which:

- explains your implementation and evaluation strategies

- presents the results

- has a maximum of 3 pages

- is readable, easy to understand and aesthetic

- contains the following on its first page:

  - your name

  - your group number

  - which parts of the assignment were completed

  - what grade you consider your assignment should receive

(10p) Bonus

Deploy your solution in a Docker image and make sure it can be run with runtime arguments representing the chosen method and the number of requests your system should serve for the full topology (all servers up). The container you create should be able to communicate with the topology.
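The runtime arguments could be read with `argparse`, as in the sketch below. The option names `--method` and `--requests`, the method names and the default values are hypothetical; pick whatever matches your own client.

```python
import argparse

# Hypothetical policy names; replace them with the methods you implemented.
METHODS = ("round_robin", "fastest_only", "weighted_random")


def positive_int(text):
    """Convert a command-line value to an int and reject values below 1."""
    value = int(text)
    if value < 1:
        raise argparse.ArgumentTypeError("must be a positive integer")
    return value


def parse_args(argv=None):
    """Parse the chosen method and the number of requests to send."""
    parser = argparse.ArgumentParser(description="Load-balancing client")
    parser.add_argument("--method", choices=METHODS, default=METHODS[0],
                        help="policy used to spread the requests")
    parser.add_argument("--requests", type=positive_int, default=10,
                        help="number of requests to send")
    return parser.parse_args(argv)
```

If the image's entry point runs a script that calls `parse_args()`, the arguments given after the image name in `docker run` should reach it unchanged.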

7. Assignment Upload

- the Python modules used in the implementation (mainly test.py and client.py or whatever other source files you used)

- a requirements.txt file to easily install all the necessary pip dependencies

- a Performance Evaluation Report in the form of a PDF file

The assignment has to be uploaded here by 23:55 on December 15th 2023. This is a HARD deadline.

If the submission does not include the Report / a Readme file, the assignment will be graded with ZERO!